RapidMiner Tutorials

It would appear that making a rolling list of all my old and newer RapidMiner Tutorials would be helpful for readers. It should be noted that these tutorials and videos were made for different versions of RapidMiner (versions 5 to 7) and may look a bit old and dated BUT they are still relevant to this day. The functionality of RapidMiner is still the same but the internal organization and look will be different. Most of the operators are the same name but things like the Time Series plugin have been fully incorporated into RapidMiner Studio now.

This version was the last true open-source version of RapidMiner and was a massive achievement by the team back in 2010. I remember when it came out, it looked awesome and was really powerful for that time. RapidMiner has gone through many changes since then, most notably applying an 'open core' type of licensing model where RapidMiner Studio is free to use for only 10,000 rows of data.

I'm a big proponent of open source but I completely understand the need to make money and satisfy the venture capitalists. The real trick is how to thread the needle between what you give away for free (showing the leg) and what you sell (getting them to buy you dinner). For now, the Red Hat model of open source seems to be winning in the marketplace. Take open-source Linux and build enterprise components around it. You sell the enterprise components. That seems to work for Cloudera too but they made some big missteps, so just because a technology is open source (aka free) you can sell mess things up.

So without further ado, here's a list of nearly all the videos and tutorials I've made. I will add to this list as pull together more of the scattered videos.

Build an AI Finance Model in RapidMiner

Before you can begin building your own AI Financial Market Model (machine-learned), you have to decide on what software to use. Since I wrote this article in 2007, many new advances have been made in machine learning. Notably the Python module Scikit Learn came out and Hadoop was released into the wild.

I’m not overly skilled in coding and programming – I know enough to get by - I settled on RapidMiner. RapidMiner is a very simple visual programming platform that lets you drag and drop “operators” into a design canvas. Each operator has a specific type of task related to ETL, modeling, scoring, and extending the features of RapidMiner.

There is a slight learning curve but, it’s not hard to learn if you follow along with this tutorial!

The AI Financial Market Model

First download RapidMiner Studio and then get your market data (OHLCV prices), merge them together, transform the dates, figure out the trends, and so forth. Originally these tutorials built a simple classification type of model that look to see if your trend was classified as being in an “up-trend” or a “down-trend.” The fallacy was they didn’t take into account the time series nature of the market data and the resulting model was pretty bad.

For this revised tutorial we’re going to do a few things.

- Install the Finance and Economics, and Series Extensions

- Select the S&P500 weekly OHLCV data for a range of 5 years. We’ll visualize the closing prices and auto-generate a trend label (i.e. Up or Down)

- We’ll add in other market securities (i.e. Gold, Bonds, etc) and see if we can do some feature selection

- Then we’ll build a forecasting model using some of the new H20.ai algorithms included in RapidMiner v7.2

All processes will be shared and included in these tutorials. I welcome your feedback and comments.

The Data

We’re going to use the adjusted closing prices of the S&P500, 10 Year Bond Yield, and the Philadelphia Gold Index from September 30, 2011 through September 20, 2016.

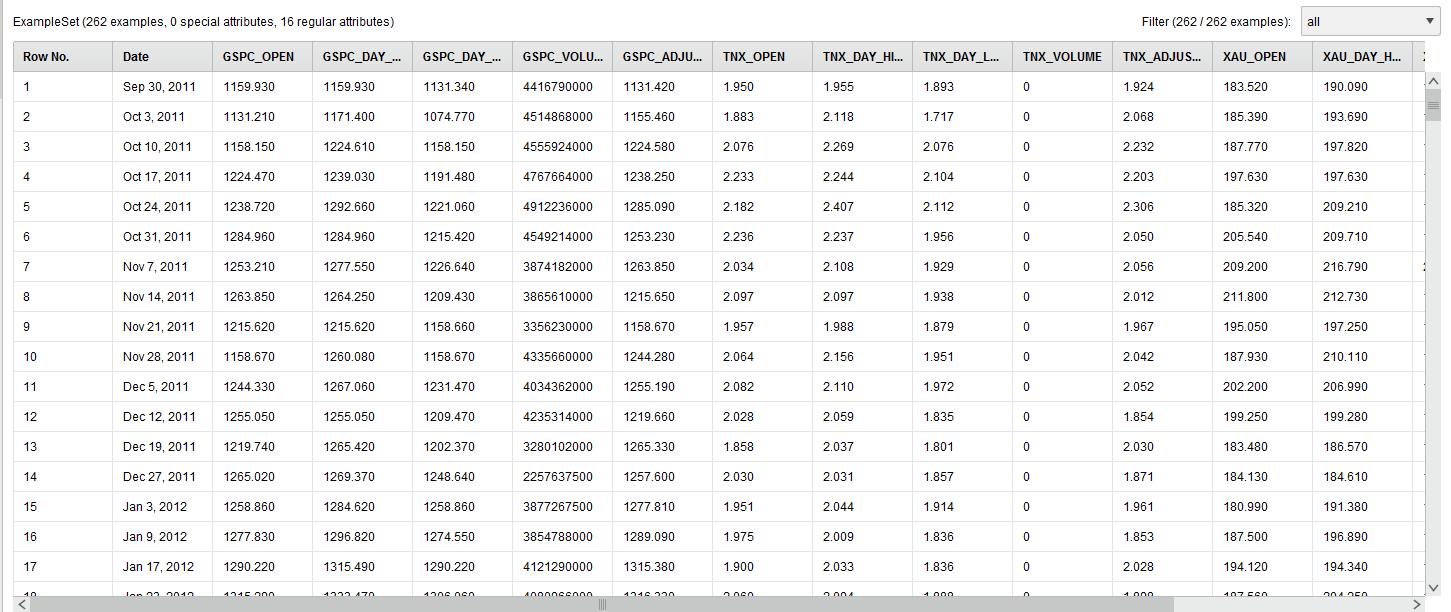

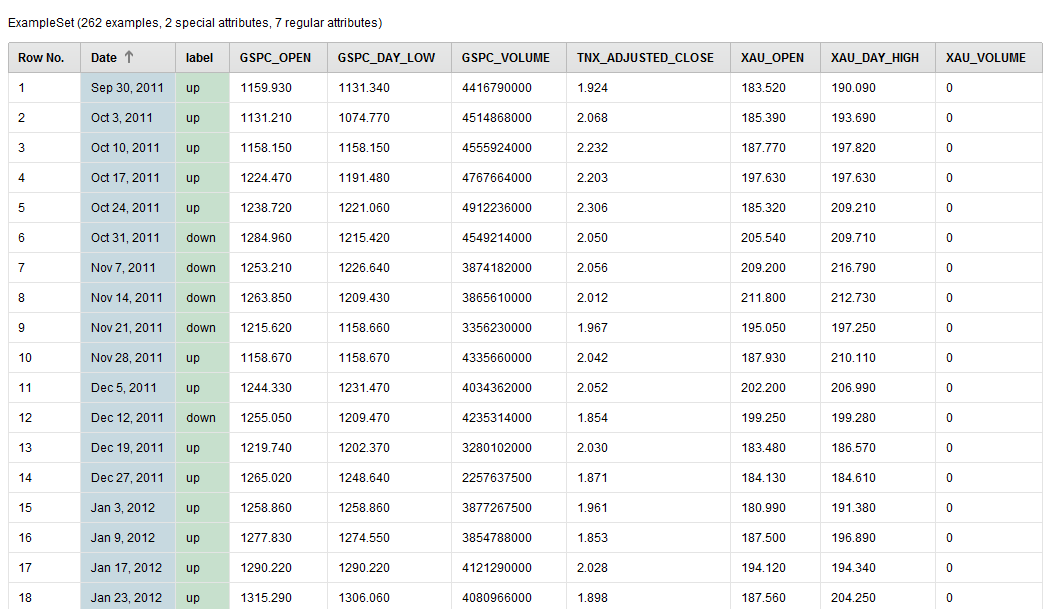

The raw data looks like this:

We renamed the columns (attributes) humanely by removing the “^” character from the stock symbols.

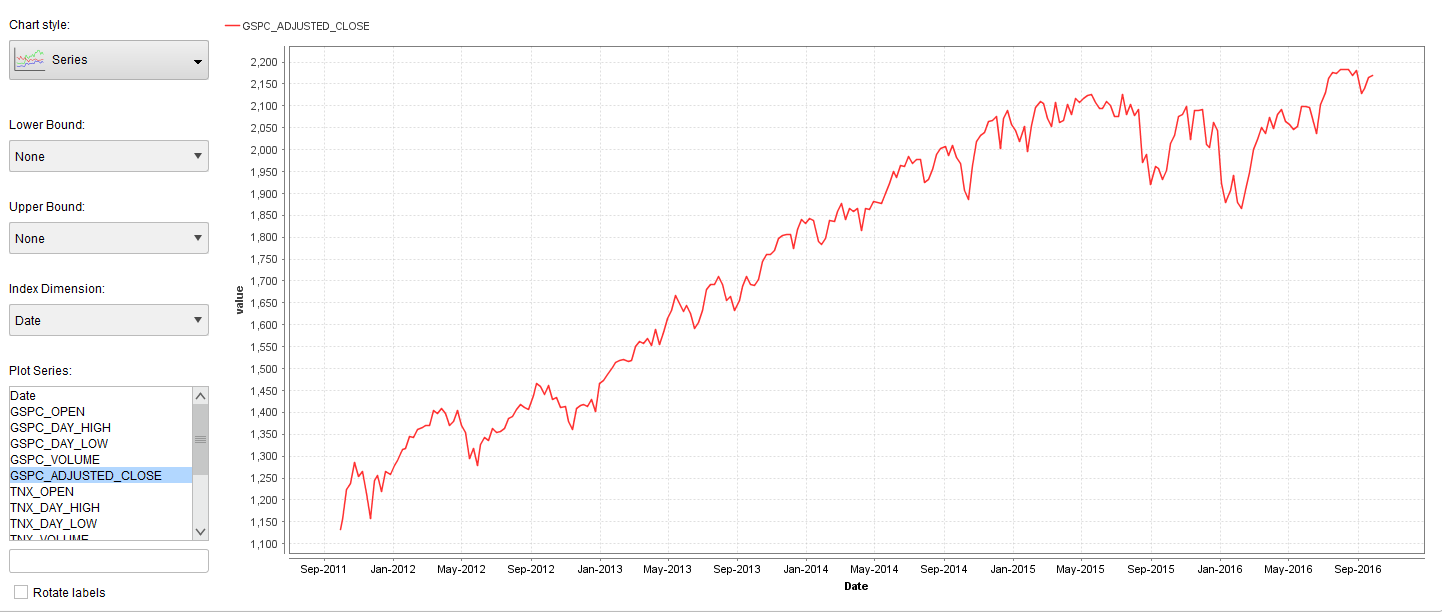

Next we visualized the adjusted weekly closing price of the S&P500 using the built in visualization tools of RapidMiner.

The next step will be to transform the S&P500 adjusted closing price into Up and Downtrend labels. To automatically do this we have to install the RapidMiner Series Extension and use the Classify by Trend operator. The Classify by Trend operator can only work if you set the SP500_Adjusted_Close column (attribute) as a Label role.

The Label role in RapidMiner is your target variable. In RapidMiner all data columns come in as “Regular” roles and a “Label” role is considered a special role. It’s special in the sense that it’s what you want the machine-learned model to learn. To achieve this you’ll use the Set Role operator. In the sample process I share below I also set the Date to the ID role. The ID role is just like a primary key, it’s useful when looking up records but doesn’t get built into the model.

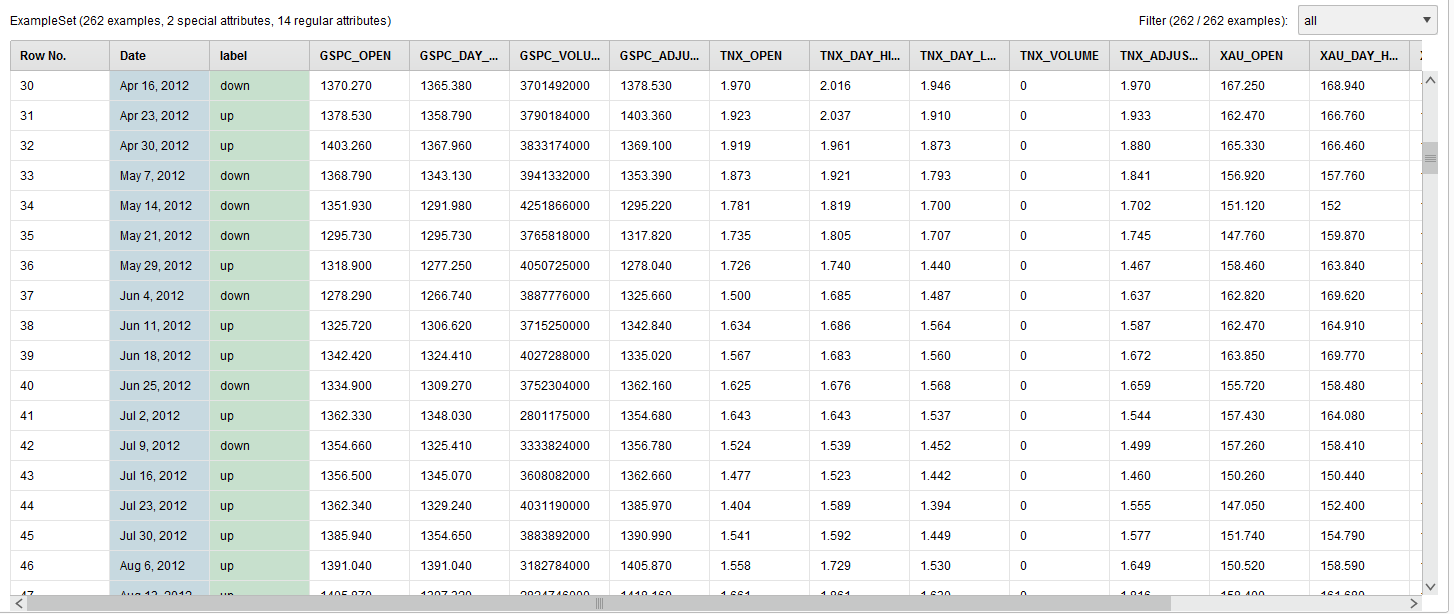

The final data transformation looks like this:

The GSPC_Adjusted_Close column is now transformed and renamed to the label column.

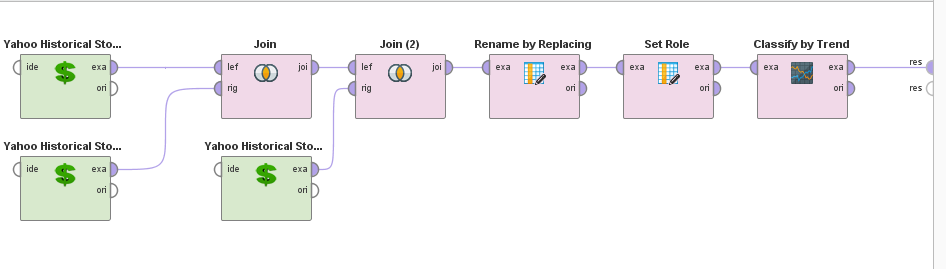

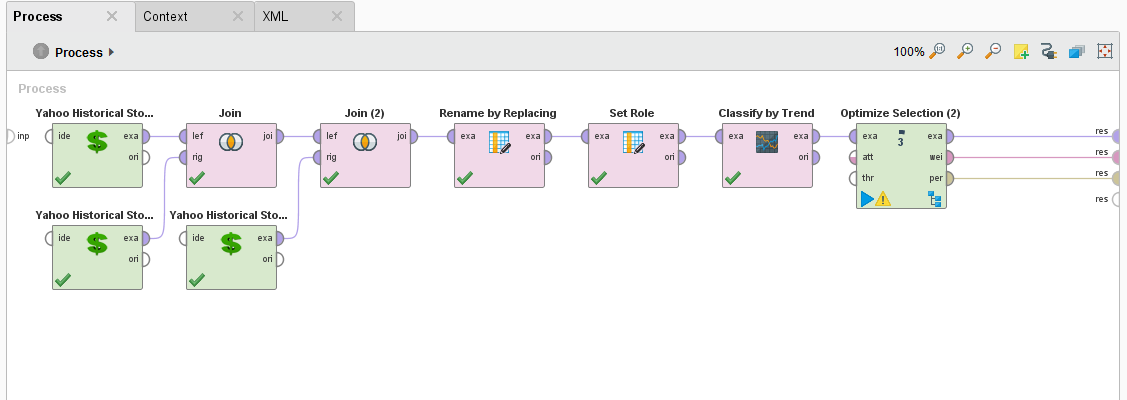

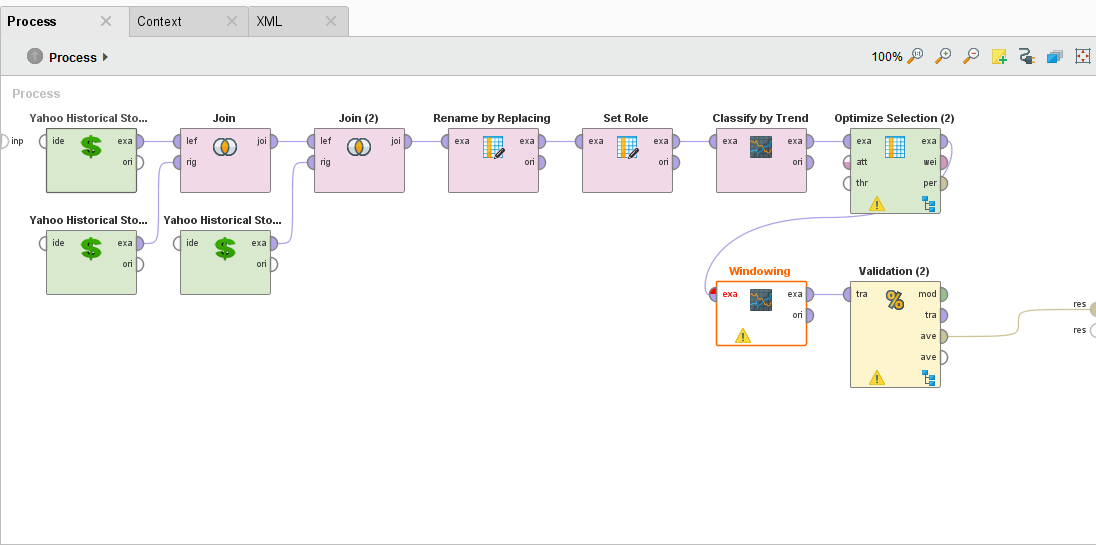

The resulting process looks like this:

Next, I want to show you how to use MultiObjective Feature Selection (MOFS) in RapidMiner**. **It’s a great technique to simultaneously reduce your attribute set and maximize your performance (hence: MultiObjective). This feature selection process can be run over and over again for your AI Financial Market Model, should it begin to drift.

Load in the Process

Start by reading the Building an AI Financial Market Model – Lesson 1 post. At the bottom of that post, you can download the RapidMiner process.

Add an Optimize Selection (Evolutionary) operator

The data that we pass through the process contains the adjusted closing prices of the S&P500, 10 Year Bond Yield, and the Philadelphia Gold. Feature Selection lets us choose which one of these attributes contributes the most to the overall model performance, and which really doesn’t matter at all.

To do that, we need to add an Optimize Selection (Evolutionary) operator.

Why do you want to do MultiObjective Feature Selection? There are many reasons but most important of all is that a smaller data set increases your training time by reducing consumption of your computer resources.

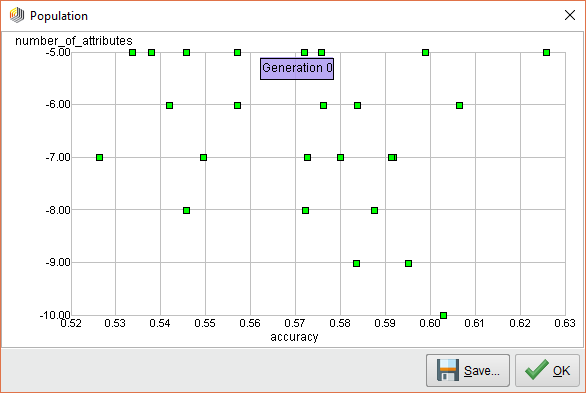

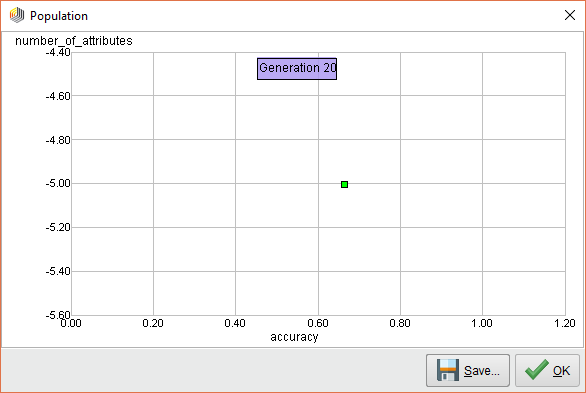

When we execute this process, you can see that the Optimize Selection (Evolutionary) operator starts evaluating each attribute. At first, it measures the performance of ALL attributes and it looks like it’s all over the map.

{{< img src="ai2-generation0.png" alt="RapidMiner AI Feature Generation 0" >}}

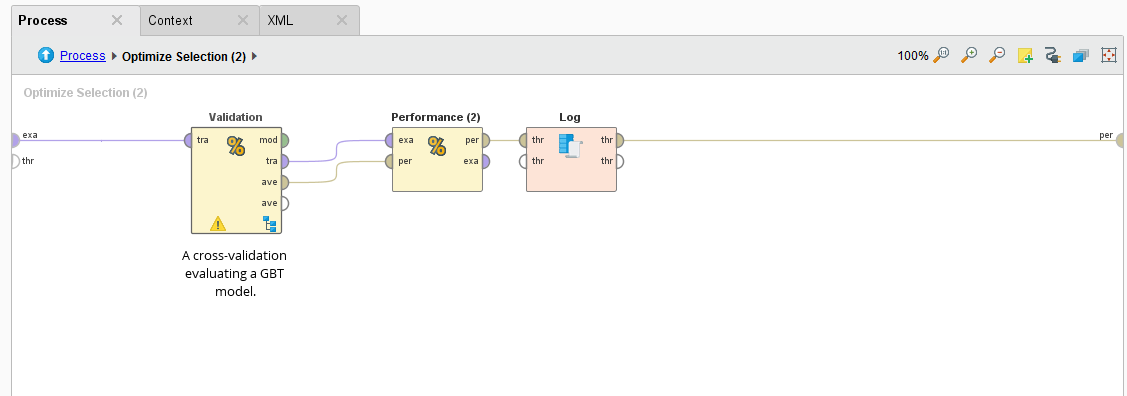

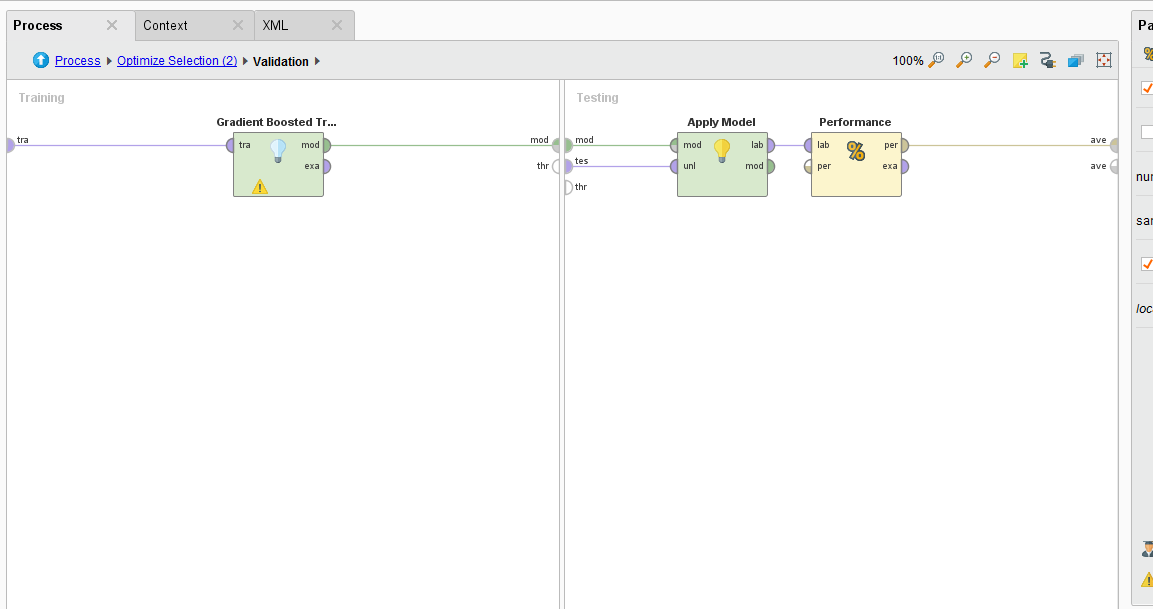

How it measures the performance is with a Cross Validation operator embedded inside the subprocess.

{{< img src="ai2-crossvalidation.png" alt="RapidMiner Cross Validation" >}}

{{< img src="ai2-GBT.png" alt="H2O Gradient Boosted Trees" >}}

The Cross Validation operator use a Gradient Boosted Tree algorithm to analyze the permutated inputs and measures their performance in an iterative manner. Attributes are removed if they don’t provide an increase in performance.

{{< img src="ai2-20generations.png" alt="RapidMiner AI Feature Generation 20" >}}

MultiObjective Feature Selection Results

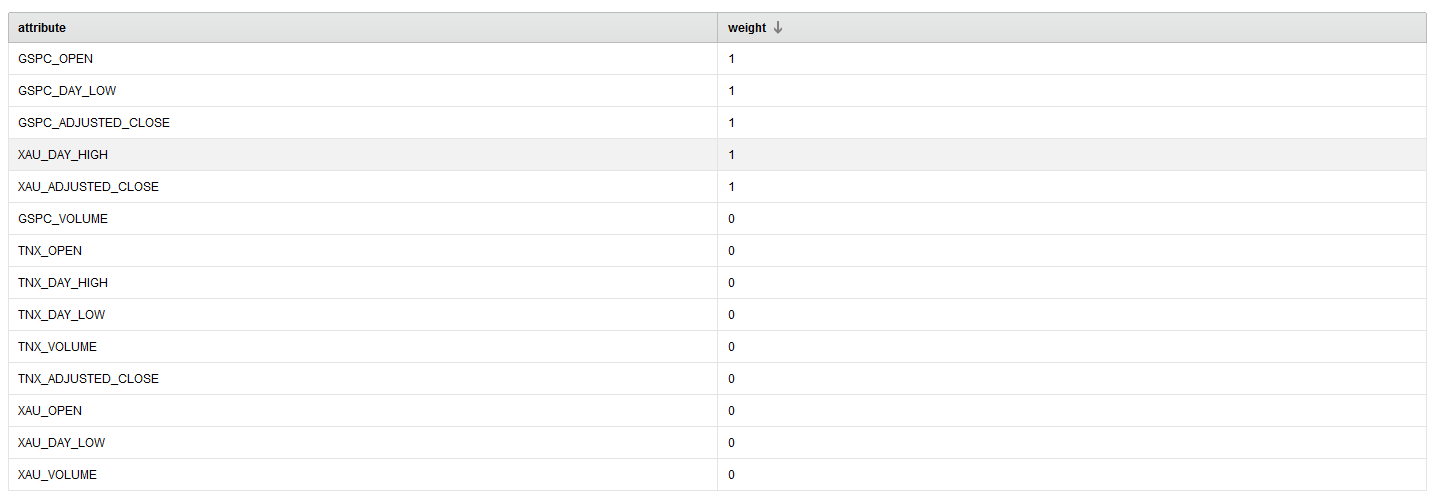

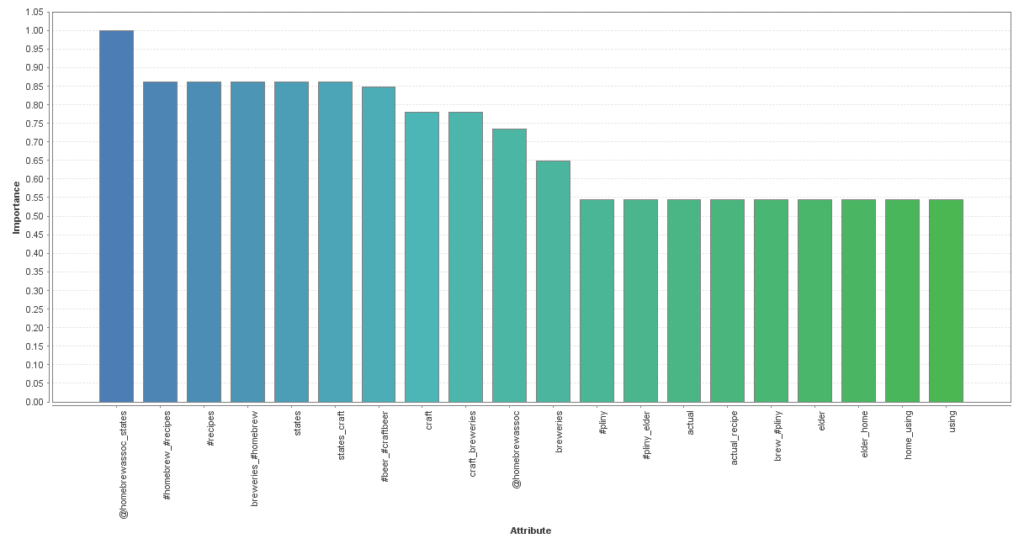

From running this process, we see that the following attributes provide the best performance over 25 iterations.

{{< img src="ai2-SelectedFeatures.png" alt="RapidMiner Selected Features" >}}

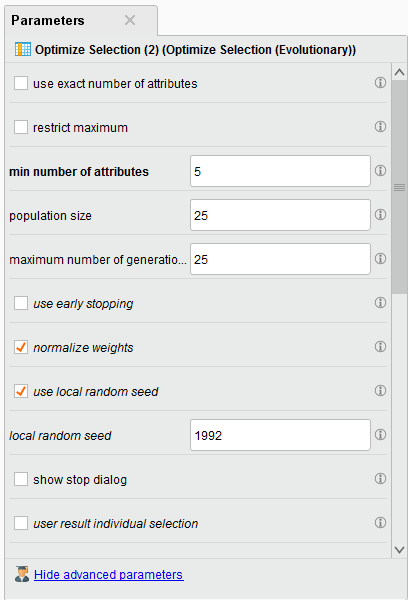

{{< img src="ai2-parameters.png" alt="Hyperparameters" >}}

Note: We choose to have a minimum of 5 attributes returned in the parameter configuration. The selected ones have a weight of 1.

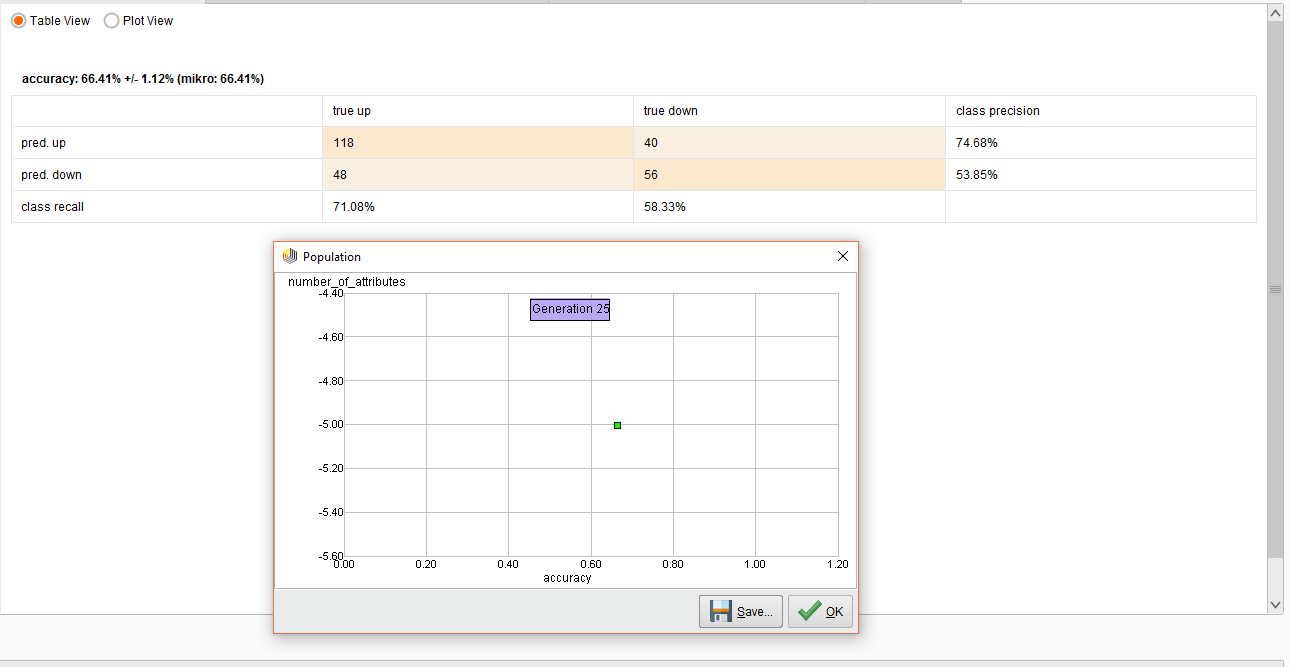

The resulting performance for this work is below.

{{< img src="ai2-Performance.png" alt="Experiment Performance" >}}

The overall accuracy was 66%. In the end, predicting and UP trend was pretty decent, but not so good for the DOWN trend.

The possible reason for this poor performance is that I purposely made a mistake here. I used a Cross Validation operator instead of using a Sliding Window Validation operator.

The Sliding Window Validation operator is used to backtest and train a time series model in RapidMiner and we’ll explain the concepts of Windowing and Sliding Window Validation in the next Lesson.

Note: You can use the above method of MultiObjective Feature Selection for both time series and standard classification tasks.

Above, I went over the concept of MultiObjective Feature Selection (MOFS). In this lesson we’ll build on MOFS for our model but we’ll forecast the trend and measure it’s accuracy.

Revisiting MOFS

We learned in lesson 2 that RapidMiner can simultaneously select the best features in your data set while maximizing the performance. We ran the process and the best features were selected below.

{{< img src="MOFS-List.png" alt="MultiObjective Feature Selection" >}}

From here we want to feed the data into three new operators that are part of the Series Extension. We will be using the Windowing, Sliding Window Validation, and the Forecasting Performance operator.

These there operators are key to measuring the performance of your time series model. RapidMiner is really good and determining the directional accuracy of time series and is a bit rough when it comes to point forecasts. My personal observation is that it’s futile to get a point forecast for an asset price, you have better luck with direction and volatility.

Our forecasting model will use a Support Vector Machine and an RBF kernel. Time series appear to benefit from this combination and you can always check out this link for more info.

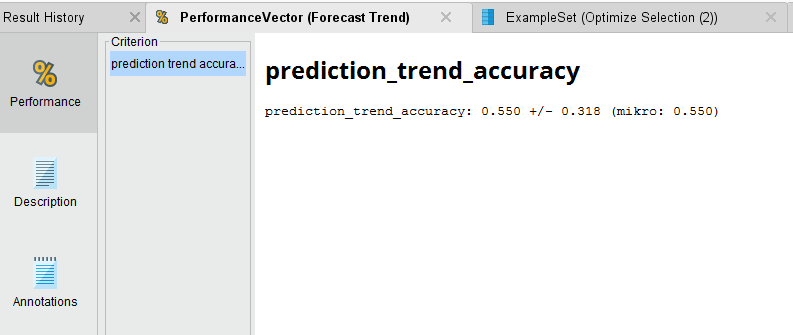

Forecast Trend Accuracy

{{< img src="wForecast-Trend-Accuracy.png" alt="Forecast Trend Accuracy" >}}

The Process

{{< img src="Process.png" alt="Forecast Trend Accuracy Process" >}}

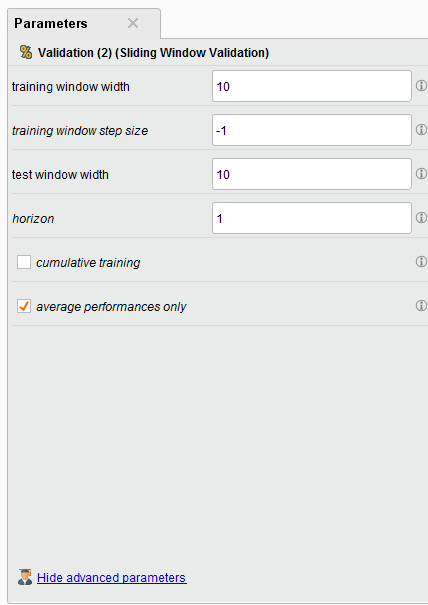

Sliding Window Validation Parameters

{{< img src="Sliding-Window-parameters.png" alt="Sliding Window Parameters" >}}

Windowing the Data

RapidMiner allows you to do multivariate time series analysis also known as a model-driven approach to analysis. This is different than a data driven approach, such as ARIMA, and allows you to use many different inputs to make a forecast. Of course, this means that point forecasting becomes very difficult when you have multiple inputs, but makes directional forecast more robust.

The model-driven approach in RapidMiner requires you to Window your Data. To do that you’ll need to use the Window operator. This operator is often misunderstood, so I suggest you read my post in the community on how it works.

Tip: Another great reference on using RapidMiner for time series is here.

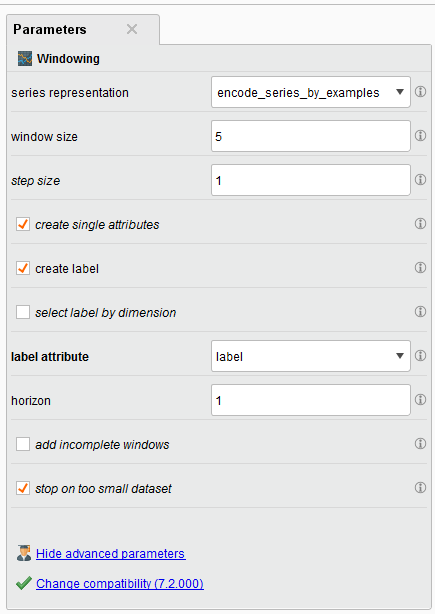

There are key parameters that you should be aware of especially the window size, the step size, whether or not you create a label, and the horizon.

{{< img src="Window-Parameters.png" alt="Optimization parameters" >}}

When it comes to time series for the stock market, I usually choose a value of 5 for my window. This can be before 5 days, if your data is daily, or 5 weeks if it’s weekly. You can choose what you think is best.

The Step Size parameter tells the Windowing operator to create a new window with the next example row it encounters. If it was set to two, then it will move two examples ahead and make a new window.

Tip: The Series Representation parameter is defaulted to “encode_series_by_examples.” You should leave this default if your time series data is row by row. If a new value of your time series data is in a new column (e.g. many columns and one row), then you should change it to “encode_series_by_attributes.”

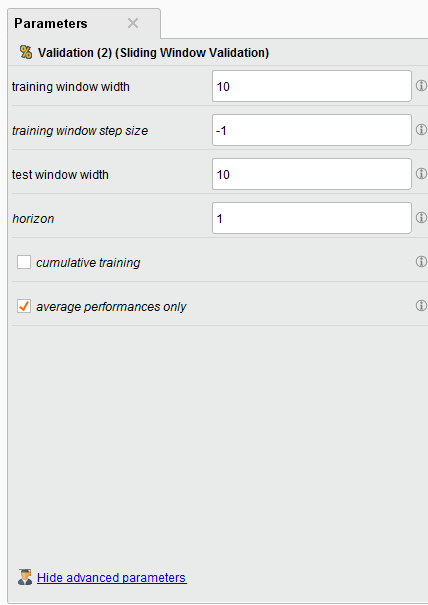

Sliding Validation

The Sliding Window Validation operator is what is used to backtest your time series, it operates differently than a Cross Validation because it creates a “time window” on your data, builds a model, and tests it’s performance before sliding to another time point in your time series.

{{< img src="Sliding-Window-parameters.png" alt="Sliding Window Parameters" >}}

In our example we create a training and testing window width of 10 example rows, our step size is -1 (which is the size of the last testing window), and our horizon is 1. The horizon is how far into the future we want to predict, in this case it’s 1 example row.

There are some other interesting toggle parameters to choose from. The default is average performances only, so your Forecast Trend Accuracy will be your average performance. If you toggle on “cumulative training” then the Sliding Window Validation operator will keep adding the previous window to the training set. This is handy if you want to see if the past time series data might affect your performance going forward BUT it makes training and testing very memory intensive.

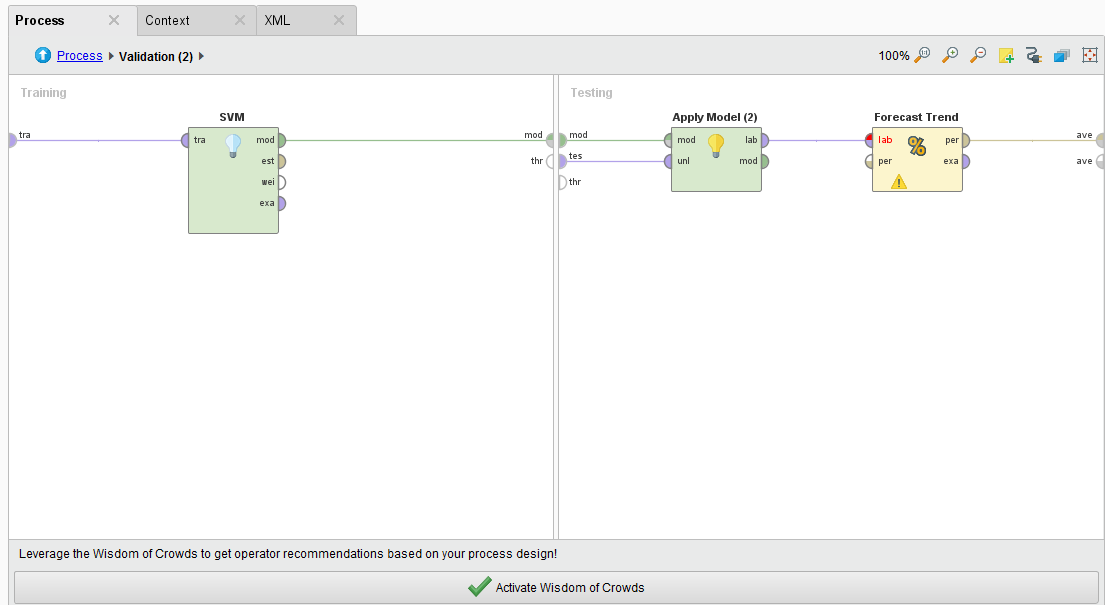

Double-clicking on the Sliding Window Validation operator we see a typical RapidMiner Validation training and testing sides where we can embed our SVM, Apply Model, and Forecasting Performance operators. The Forecasting Performance operator is a special Series Extension operator. You need to use this to forecast the trend on any time series problem.

{{< img src="Sliding-Window-Guts.png" alt="Sliding Window Guts" >}}

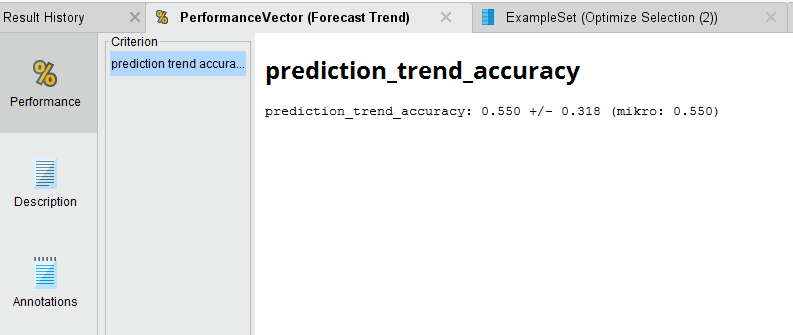

Forecast the Trend

Once we run the process and the analysis completes, we see that we have a 55.5% average accuracy to predict the direction of the trend. Not great, but we can see if we can optimize the SVM parameters of C and gamma to get better performance out of the model.

{{< img src="wForecast-Trend-Accuracy.png" alt="Forecast Trend Accuracy" >}}

In my next lesson I’ll go over how to do Optimization in RapidMiner to better forecast the trend.

Above I introduced the Sliding Window Validation operator to test how well we can forecast a trend in a time series. Our initial results are very poor, we were able to forecast the trend with an average accuracy of 55.5%. This is fractionally better than a simple coin flip! In this updated lesson I will introduce the ability of Parameter Optimization in RapidMiner to see if we can forecast the trend better.

Parameter Optimization

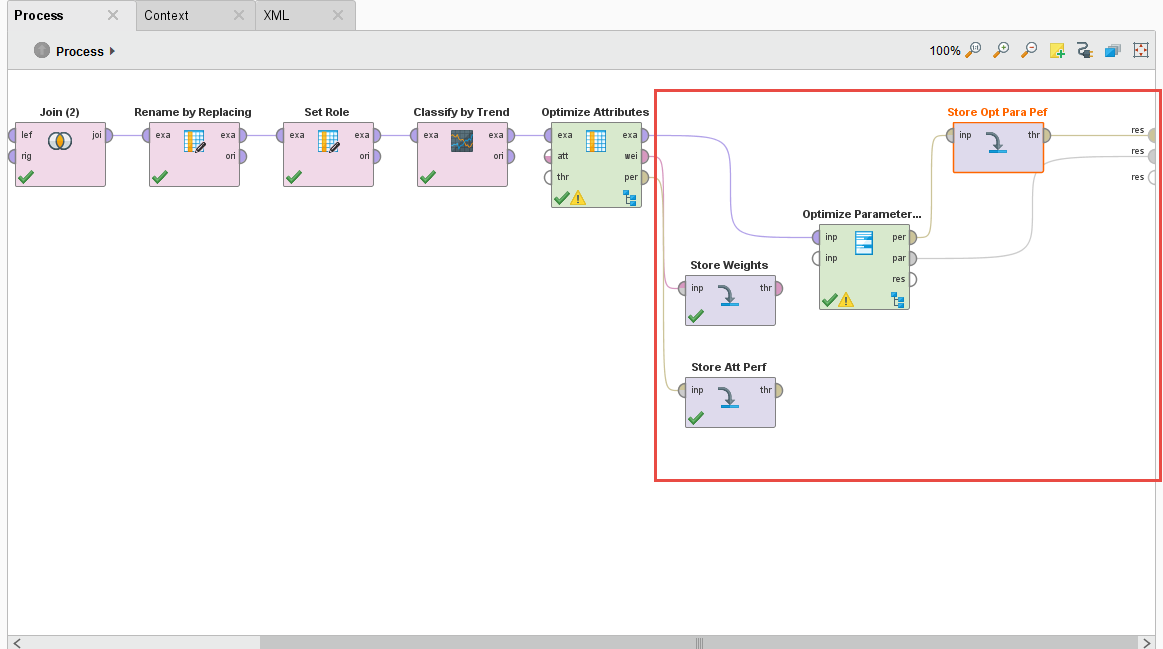

We begin with the same process in Lesson 3 but we introduce a new operator called the Optimize Parameter (Grid) operator. We also do some house cleaning for putting this process into production.

{{< img src="AI4-Process.png" alt="RapidMiner Finance AI model proess" >}}

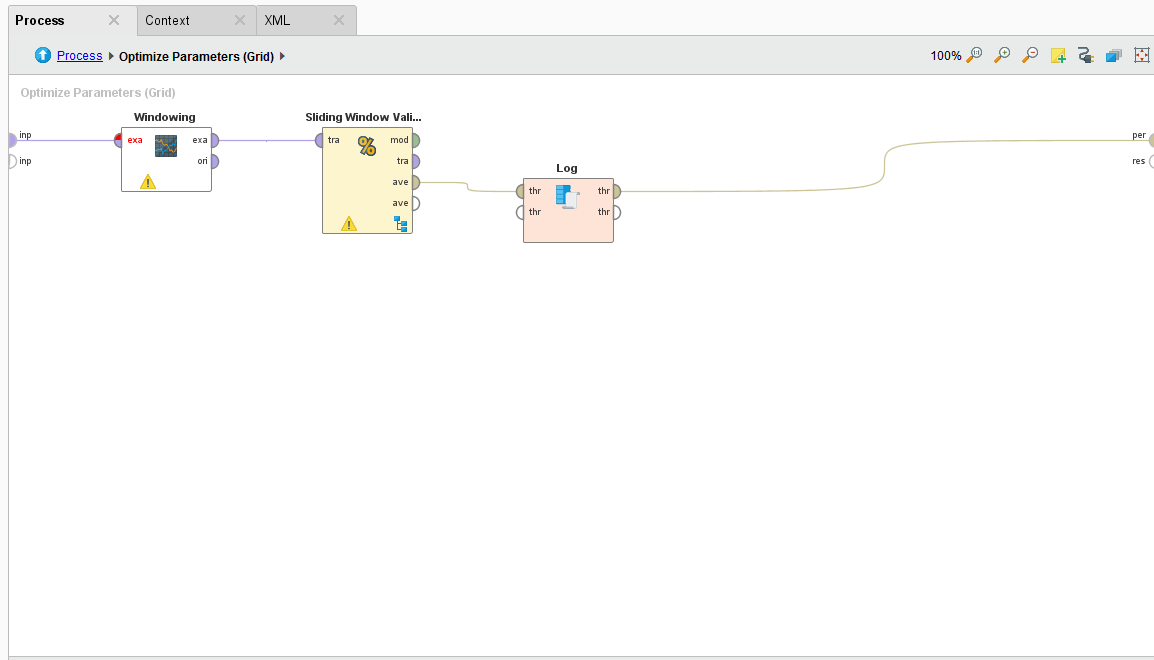

The Optimize Parameter (Grid) operator lets you do some amazing things, it lets you vary – by your predefined limits – parameter values of different operators. Any operator that you put inside this operator’s subprocess can have their parameters automatically iterated over and the overall performance measured. This is a great way to fine-tune and optimize models for your analysis and ultimately for production.

{{< img src="Inside-Optimize-Parameters.png" alt="Inside the Optimize Parameters Option" >}}

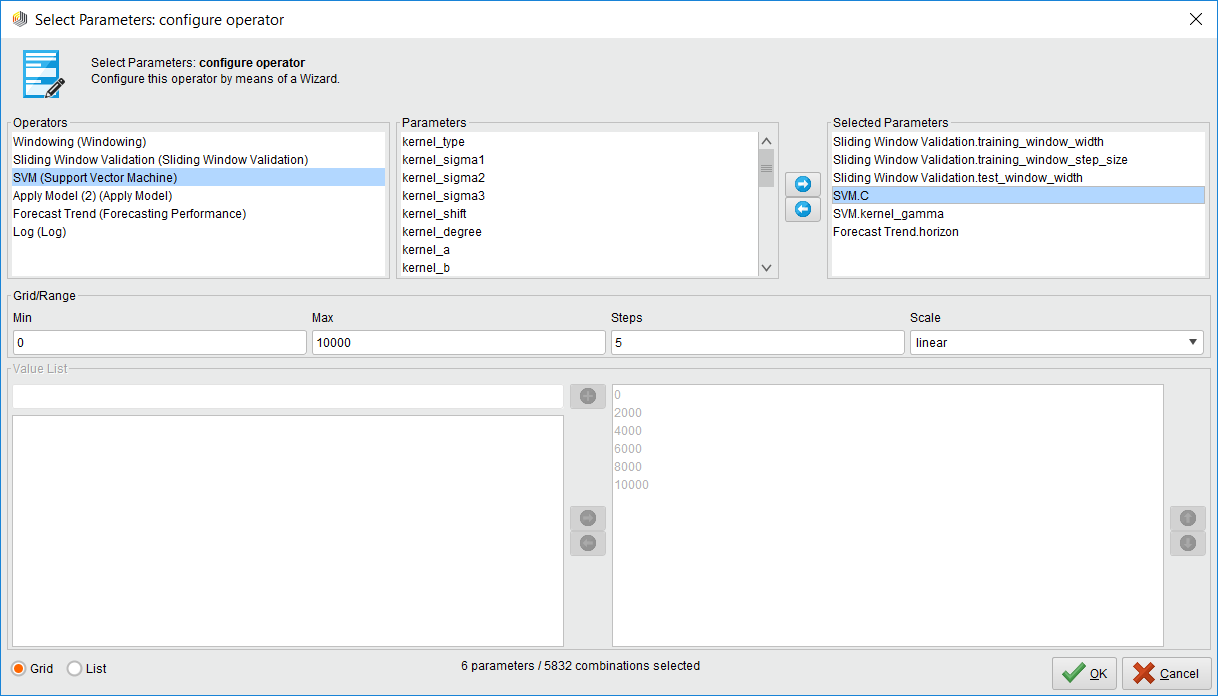

For our process, we want to vary the training window width, testing window width, training step width on the Sliding Window Validation operator, the C and gamma parameters of the SVM machine learning algorithm, and the forecasting horizon on the Forecast Trend Performance operator. We want to test all combinations and ultimately determine the best combination of these parameters that will give us the best-tuned trend prediction.

Note: I run a weekly optimization process for my volatility trend predictions. I’ve noticed depending on market activity, the training width of the Sliding Window Validation operator needs to be tweaked between 8 and 12 weeks.

I also add a few Store operators to save the Performance and Weights of the Optimize Selection operator, and the Performance and Parameter Set of the Optimization Parameter (Grid) operator. We’ll need this data for production.

Varying Parameters Automatically

Whatever operators you put inside the Optimize Parameters (Grid) operator can have their parameters varied automatically, you just have to select which ones and set minimum and maximum values. Just click on the Edit Parameter settings button. Once you do, you are presented with a list of available operators to vary. Select one operator and another list of available parameters is shown. Then select which parameter you want and define min/max values.

{{< img src="Inside-Optimize-Parameters2.png" alt="Inside the Optimize Parameters Option Part 2" >}}

Note: If you select a lot of parameters to vary with a very large max value, you could be optimizing for hours and even days. This operator consumes your computer resources when you have millions of combinations!

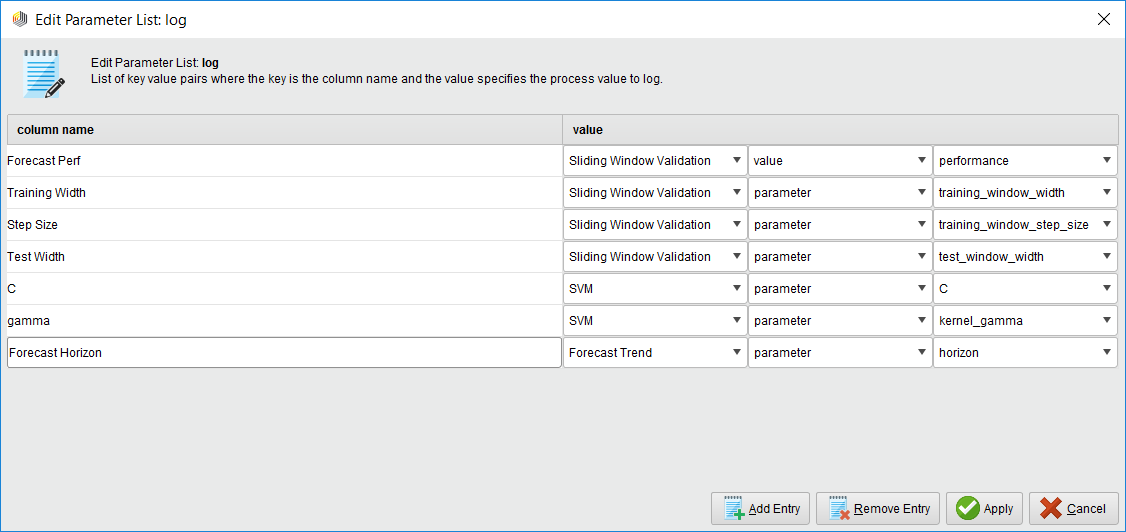

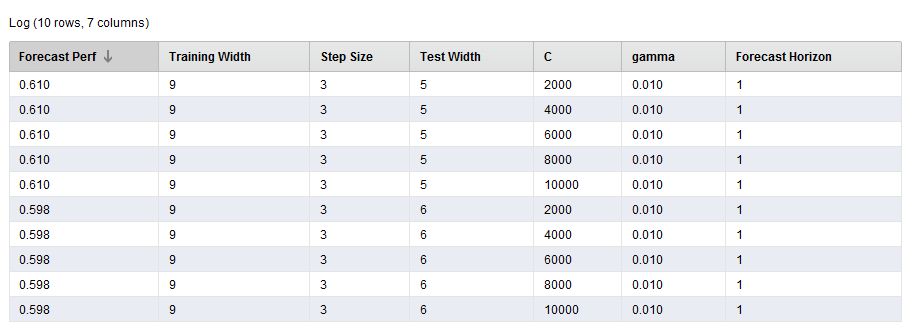

The Log File

The log file is a handy operator that we use in optimization because we can create a custom log file that has the values of the parameters we're measuring and the resulting forecast performance. You just name your column and select which operator and parameter you want to have an entry for.

{{< img src="Log-Operator.png" alt="The handy Log Operator" >}}

Pro Tip: If you want to measure the performance, make sure you select the Sliding Window Validation operator’s performance port and NOT the Forecast Trend Performance operator. Why? Because the Forecast Trend Performance operator generates several models as it slides across the time series. Some performances are better than others. The Sliding Window Validation operator averages all the results together, and that’s the measure you want!

{{< img src="Log-Operator2.png" alt="Log Operator Part 2" >}}

This is a great way of seeing what initial parameter combinations are generating the best performance. It can also be used to visualize your best parameter combinations too!

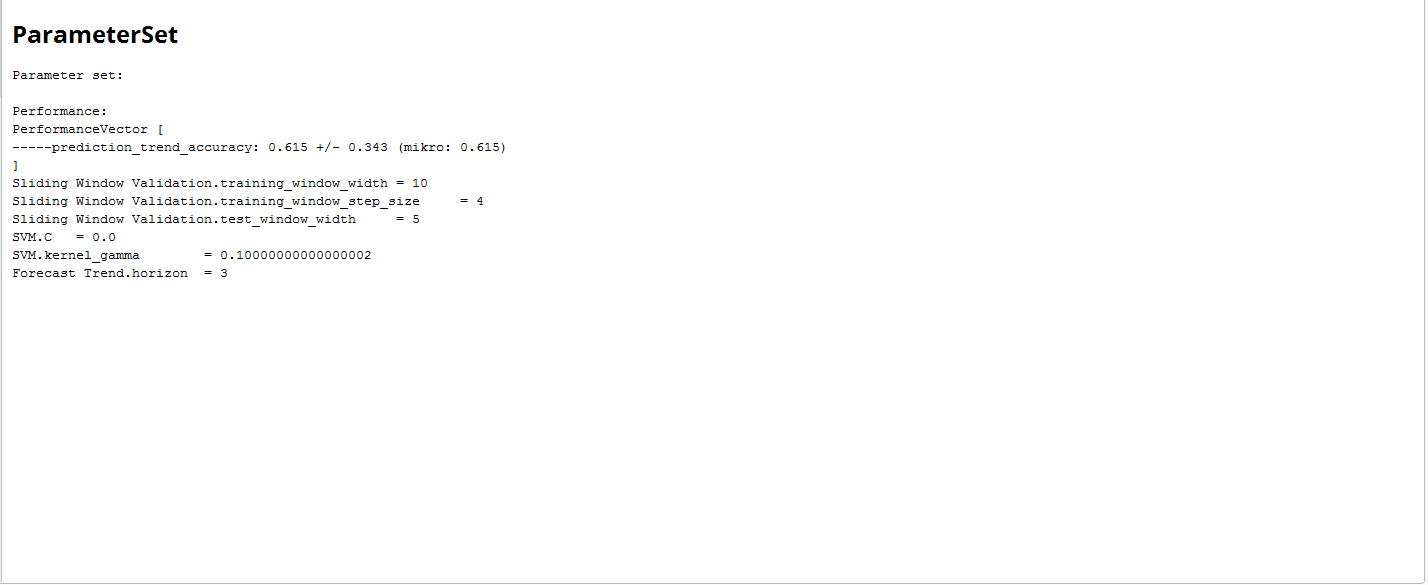

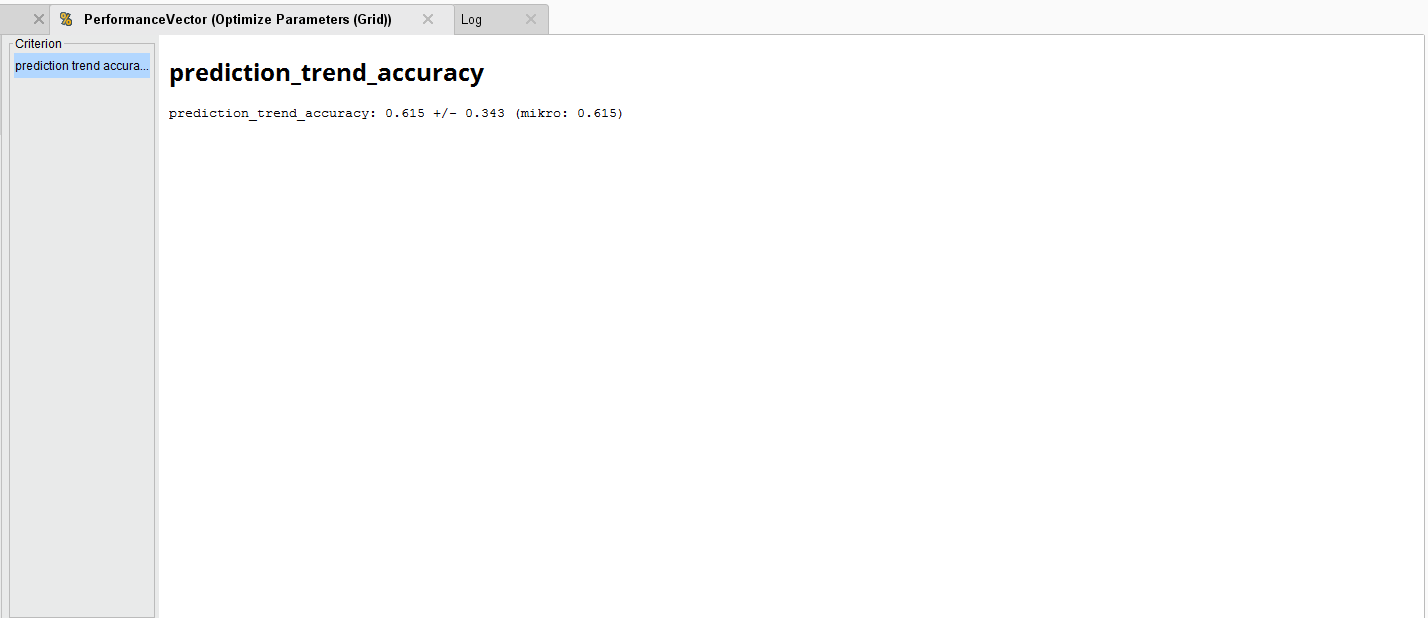

The Results

The results are point to a parameter combination of:

- Training Window Width: 10

- Testing Window Width: 5

- Step Width: 4

- C: 0

- Gamma: 0.1

- Horizon: 3

{{< img src="arameterSet.png" alt="Setting RapidMiner Parameters" >}}

{{< img src="Optimized-Trend-Accuracy.png" alt="Optimizing Trend Accuracy" >}}

To generate an average Forecast Trend accuracy of 61.5%. Compared to the original accuracy, this is an improvement.

Introduction to RapidMiner

After a very long hiatus, I present to my readers my first Rapidminer 5.0 video tutorial. It's just a quick 10 min introduction to the GUI and data import functions of Rapidminer 5.0. You're gonna like the way it looks!

PS: My Youtube Channel is here: Neuralmarkettrends1

PPS: For those who want to follow along, see the original GE.xls file.

This video is for the old open-source RapidMiner 5.0. You can find a copy of it here.

Update to the first video

It's hard to believe that I made these RapidMiner videos over 10 years ago. I find it really cool that I still get a lot of visitors coming to this site to learn RapidMiner, even if these videos are really old and superseded by RapidMiner's own fancy videos now.

So what can I do now for people that come here looking to learn RapidMiner? Well if my SEO tells me anything, people really like to learn about Time Series forecasting for the markets. They want to use RapidMiner because it's a zero to low code environment. After all, that's how I got to RapidMiner in the first place and inspired this blog.

The reality is that over time forecasting and predicting things is hard. You could make a living and build trading models and make money in the markets but it's hard. I recommend doing the passive investing way but if you're coming here to learn about using RapidMiner then I'd suggest you visit RapidMiner's updated videos. RapidMiner now is a platform that has a free option but to process any more than 10,000 rows you have to buy it, and it ain't cheap last time I checked.

If you want to learn time series, forecasting, and even deep learning the market environment then I suggest you skip RapidMiner and dive right into Python. You really need to understand what you are doing with time series and if you want to do a fast 'half-assed' job at learning this, then you'll end up wasting your time and possibly losing money in the markets.

So instead of making new videos, I wanted to append these videos with something more relevant and hopefully start you off on the right foot. I was inspired by this Medium post and I figured it would make more sense to not reinvent the wheel but rather build on the work of others, much like many past readers have done with my videos.

So I offer you my Tutorial Github page. It's where I'll be posting the code I share here on this blog and rebuilding older tutorials, as time permits.

Gold Classification Model in RapidMiner - Part 1

Looks like I'm on a roll! Please see my Rapidminer 5.0 Video Tutorial #2. In this video, we begin the process of recreating my original written NMT YALE/Rapidminer tutorials into version 5.0 and into a video. This video shows how to import training and prediction data, add a classification learner, apply the model, and get the results.

The data files you will need to follow along are the Excel spreadsheets below:

Training data set: gold_final_input - not working

Prediction data set: ga-gold - not working

This video and the next are my complete (NOOB) screw-ups when it comes to applying machine learning to time series. I would skip over these and use this Python Notebook I put together using someone else's great tutorial.

The Python Notebook uses ARIMA and stationarity to analyze a time series and forecast it. ARIMA is not perfect and it doesn't work well in a trend unless you extract the trend's residuals and test them for stationarity. It's all quite complex but better than this model.

Gold Classification Model in RapidMiner - Part 2

In this video I discuss how to use a cross and simple validation operator to split your training data into two sets: training and validation data sets.I also highlight the new intuitive "quick fix" error solution suggestions in Rapidminer 5.0. Enjoy!

Genetic Optimziaton in RapidMiner

In this video, I highlight the data generation capabilities for Rapidminer 5.0 if you want to tinker around, and how to use a Genetic Optimization data pre-processor within a nested experiment. Yes, you read that correctly, a nested experiment.

Preprocessing Data with RapidMiner

In this video we continue where we left off in Video Tutorial #4. We discuss some of the parameters that are available in the Genetic Algorithm data transformers to select the best attributes in the data set. We also replace the first operator with another Genetic Algorithm data transformer that allows us to manipulate population size, and mutation rate, and change the selection schemes (tournament, roulette, etc).

https://www.youtube.com/watch?v=j5vhwbLlZWg

Create a Decision Tree in RapidMiner

Calling all marketers! In this video we discuss how we can use Rapidminer to create a decision tree to help us find "sweet spots" in a particular market segment. This video tutorial uses the Rapidminer direct mail marketing data generator and a split validation operator to build the decision tree.

https://www.youtube.com/watch?v=Vf6G1HNdBoI

Evolutionary Weighting in RapidMIner

In this tutorial, we highlight Rapidminer's weighting operator using an evolutionary approach. We use financial data to preweight inputs before we feed them into a neural network model to try to better classify a gold trend.

https://www.youtube.com/watch?v=fJqOyJ8h8Rs

Financial Time Series Discovery in RapidMiner

In this video we review data discovery techniques for Financial Time Series data by calculating a Simple Moving Average (SMA), creating a non-linear trend line using a Neural Net operator, and creating a time series prediction line using a Neural Net operator.

https://www.youtube.com/watch?v=4ifq4NojbV8

Time Series in RapidMiner - Part 1

In this video we start building a financial time series model, using S&P500 daily OHLCV data, and the windowing, sliding validation, and forecasting performance operator. This Part 1.

Here are the XLS training files.

- [S&P500 Training XLS](/public/2010/GSPC Time Series Training Data.xls)

https://www.youtube.com/watch?v=w0vSSEq2bn0

Time Series in RapidMiner - Part 2

In this video we continue building a financial time series model, using S&P500 daily OHLCV data, and the windowing, sliding validation, and forecasting performance operator. We test the model with some out-of-sample S&P500 data.

Here are the XLS training and out-of-sample files.

- [S&P500 Training XLS](/public/2010/GSPC Time Series Training Data.xls)

- [S&P500 Out of Sample XLS](GSPC Time Series Out of Sample Data.xls)

https://www.youtube.com/watch?v=UmGIGEJMmN8

Pattern Recognition in RapidMiner

I'm back to making new videos again, at least for a little while! This new video showcases the Pattern Recognition & Landmarking plugin that was unveiled at RCOMM 2010.

This plugin is fantastic! It analyzes your data, ranks the best type of learners that should yield the highest accuracy, and then automatically constructs the process for you. It's so great that it helps answer one of the most often-asked questions from my readers, "Which learner should I use for my data?"

https://www.youtube.com/watch?v=7zpKM9Pd-IE

Build Trading Rules with RapidMiner

In this video, I highlight the often overlooked (I know I did) Generate Attribute operator to create trading rules. This operator is not just applicable to financial data but to any data set where you want to transform and create new attributes "on the fly."

No sooner did I post this video than I received a notification of a new post on the Rapid-I blog about this operator? They're going to extend nominal values in the Generate Attribute operator in Rapidminer 5.1.

https://www.youtube.com/watch?v=JNmLIkp4adM

Parameter Optimization in RapidMiner

In this Rapidminer Video Tutorial, I show the user how to use the Parameter Optimization operator to optimize your trained data. The example shows how Rapidminer iterates the learning rate and momentum for a Neural Net Operator to increase the performance of the trained data set.

Video #14 will be about web mining financial text data.

Updated: this tutorial is still valid for RapidMiner versions 5+, 6+, and 7+

https://www.youtube.com/watch?v=R5vPrTLMzng

Web Mining Data in RapidMiner

In this Rapidminer Video Tutorial, I show the user how to use web crawling and text mining operators to download 4 web pages, build a word frequency list, and then check out the similarities between the websites.

Hat tip to Neil at Vancouver.blogspot.com and the Rapid-I team.

https://www.youtube.com/watch?v=OXIKydgGbYk

Advanced ETL using Macros and Loops

In this video, I share some tips and tricks on how to RapidMiner Macros and Loops. At first, these concepts might be a bit tricky but they are WELL WORTH LEARNING! Note my happy emphasis!

https://www.youtube.com/watch?v=2occyfexq6c

Tips and Tricks in RapidMiner

Finally got around to making a few new videos. This one is about using the Union operator and it saved me from a Sunday night of hell. Hope you find this useful!

https://www.youtube.com/watch?v=3hduSkSplHc

Balancing Data in RapidMiner

Posted another tips and tricks video using Rapidminer. This one is about how to balance and sample data from a large data set (10 million rows).

https://www.youtube.com/watch?v=038HyhoZN60

You can download the original CSV file here: CreditCardData.csv << This link is dead. I can't find the XLS.

RapidMiner Livestream 1

https://www.youtube.com/watch?v=0Ctqm-Owm7Q

RapidMiner Livestream 2

https://www.youtube.com/watch?v=0TnDW87nt58

RapidMiner Livestream 3

https://www.youtube.com/watch?v=WdYpWAFxzR8

RapidMiner Livestream 4

https://www.youtube.com/watch?v=fpfvNhWmmQo

WordClouds in RapidMiner Using R

https://www.youtube.com/watch?v=shOxzHFpJ5U

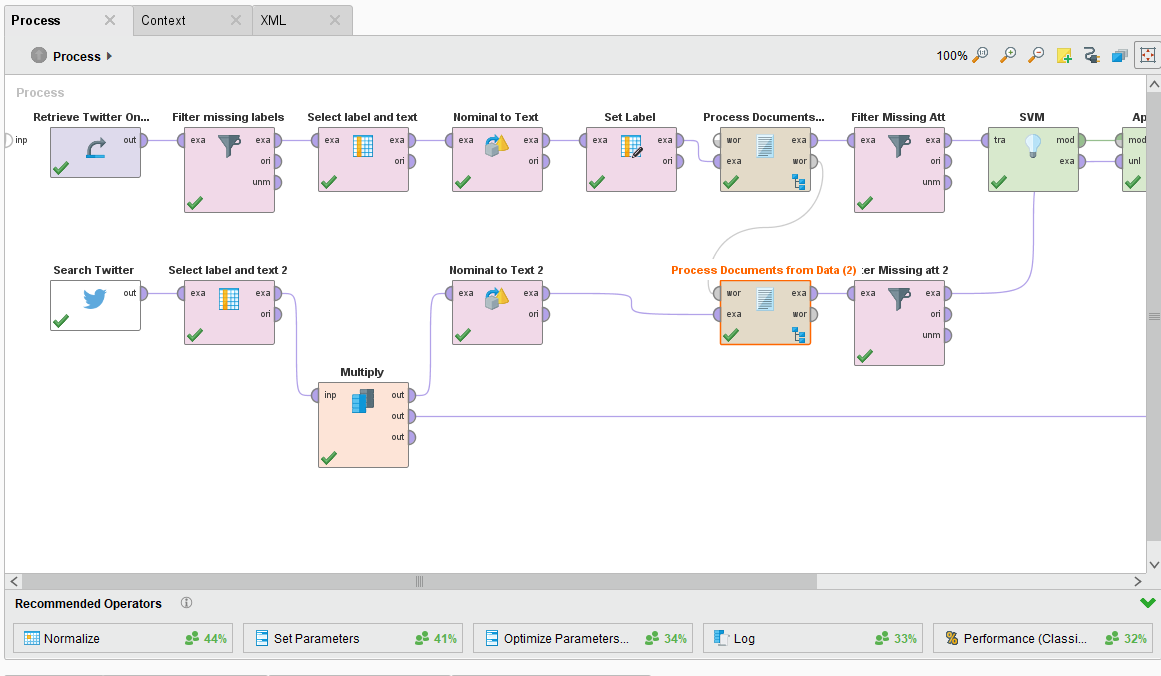

RapidMiner & Python in Production on RapidMiner Server

Continuing my RapidMiner Server series. In this video, I show you how to save a RapidMiner Studio process to RapidMiner Server. Then configure RapidMiner Server and a Job Agent to use Python. The result is a productionalization of a simple auto-posting Twitter process.

https://www.youtube.com/watch?v=N8ShrHBRv2U

Text Mining Federal Reserve Meeting Minutes

I made another video for RapidMiner last week and this time it's about Text Mining Federal Reserve Meeting minutes. In the video, I show how you cluster words and use association rules/item set mining to find interesting word associations. There's some good stuff in those meeting minutes.

https://www.youtube.com/watch?v=kwonnEGFjhY

Parameter Optimization in RapidMiner (no video)

In several of my video tutorials, I assign different parameters for my learning model "on the fly." Of course, the question any astute reader/viewer should ask is, "Why did you choose those parameters instead of another combination?"

Note: Hyperparameter Optimization is a BIG thing in Data Science and Machine Learning. If you're learning Data Science you should pay close attention on the different methods of optimization. They range from grid, random search, bayesian, and others.

That's a great question and the answer is, "Well I just choose those parameters to illustrate my point for the video." While this answer is not at all satisfying to the astute reader/viewer, it does lead us to ask the most important question of all, "what are the right parameters to choose?"

This can be answered very well if you were to use Rapidminer's Parameter Optimization operator in your initial data discovery phase. This operator allows you to choose some or all of the parameters in your experiment and iterate different values for them to meet some specific requirement on your part (i.e. performance).

For example, if you were using the Neural Net operator and didn't know what to set your learning and momentum parameters to, to get the best classification accuracy, you would use the Parameter Optimization operator to integrate different combinations of those parameters to find the best accuracy.

Once the Parameter Optimization operator determines those values, you can input them into your experiment and truly optimize your model for performance! See below for an actual output from a parameter optimization model I'm working on. You can see that Rapidminer indicated that a momentum of 0.3 and a learning rate of 0.6 was the best parameter settings to maximize the accuracy rate and minimize the classification error.

While is operator is a fantastic feature (they got evolutionary optimizers too!) for us data modelers, it's a massive computer resource suck. I would advise anyone using this operator to have a very powerful server or computer, with oodles of memory, to run your iterations.

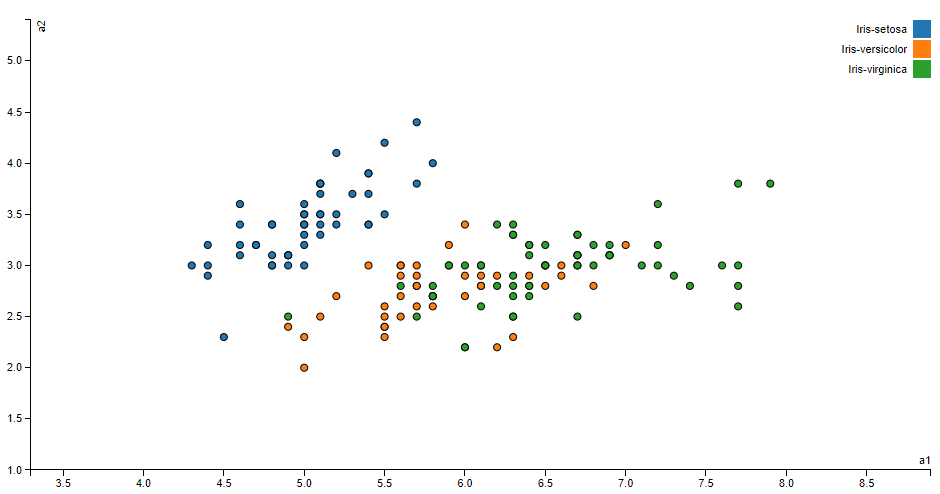

Sample Parameter Optimization Example in RapidMiner (no video)

Below is a simple parameter optimization process in Rapidminer using the Iris data set. Download the TXT file and import it into Rapidminer. Of course, you may use whatever data set you want and switch out the learner. Make sure to update the parameter optimization operator parameters. :)

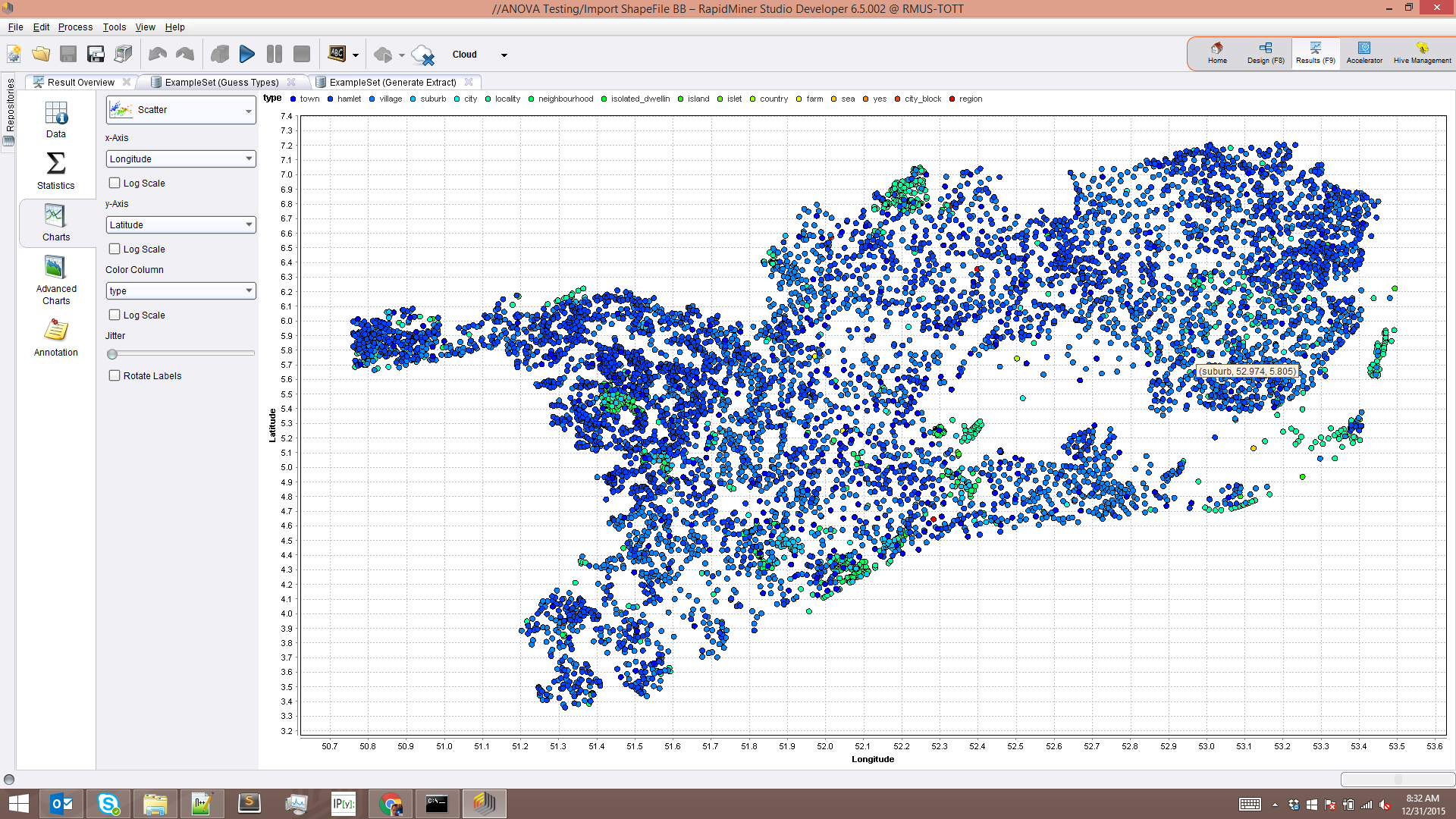

Extending GIS Data in RapidMiner (no video)

My talented coworker and GIS expert, Balázs Bárány, made some amazing progress on extending GIS in RapidMiner. Using Groovy Script, and the Execute Script operator in RapidMiner, he was able to import a GIS shapefile.

I find this incredibly cool because as a former Civil Engineer, I spent many hours extracting traverses from survey points (i.e. latitude and longitude), calculating drainage areas from polygons, and using environmental-related data for reports.

To show you want can be done, I downloaded GIS information for the Netherlands from GeoFabrik to test this out.

{{< img src="NLShapefile.png" alt="The Netherlands as a GIS ShapeFile" >}}

Just follow his write-up on installing the GeoScript Java libraries and modifying your RapidMiner Studio startup script. If you need the new Groovy Script Jar file, you can get it here.

RapidMiner Text Mining Resources

Just some Text Mining resources in RapidMiner that I found cool, helpful, and interesting. This list will be updated as I find more links.

Using NLTK and Text Blob Python packages

Fix spelling mistakes using Text Blog Python package

Splitting text into sentences inside RapidMiner

Building a dictionary-based sentiment model in RapidMiner

Text processing customer reviews using the Aylien extension

Fix Spelling Mistakes with Python and RapidMiner (no video)

The RapidMiner Community has some really talented Data Scientists. I recently came across a response by Unicorn lionelderkrikor on how to fix general spelling errors using a bit of Python and RapidMiner.

The goal here was to correct things like "verrry goood!" to "very good!", or "yah!" to "yes!" Typical annoying text-processing tasks that every data scientist needs to do time and time again.

RapidMiner was used to do the heavy text processing and Lionel used the Python Textblob library to write two simple functions that corrected the majority of mistakes.

Note, I said majority. In some cases if you wrote 'verrrrrrrrrrrrrrrrrrrrrrrrrrrrrryyyyyyyyyyyyyyyyyy goooooooooooooooooooooooooddddddddddddd', the Textblob library couldn't figure it out, and I completely understand it. If you wrote that above in a Tweet, I'd take away your smartphone and spank you with it.

Check out the Community post and grab Lionel's XML to play with it yourself.

Rest API's & RapidMiner for Text Mining

https://www.youtube.com/watch?v=jQ389ILWw6A

- Online is becoming the 'goto' place for customers to interact with a company

- The number 1 reason to access chat is to get a quick answer in an emergency

- RapidMiner uses the Drift API and help user to navigate to the answer

- Retrieves online chat from REST API > Text Processes conversation > Categorizes via LDA > pushes to RapidMiner Server

- Need extensions: Text Processing / Operator Toolbox / Web Mining

- APIs respond in JSON Arrays

- Use Online JSON Viewer to Pretty Print responses

- Store auth tokens as a macro in RapidMiner

- Get Page is not a REST API tool, it just queries pages on the internet BUT it has some handy abilities

- Get Pages > JSON to Data operator.

- Get the JSON array from Get Pages and convert it via JSON to Data operator

Using the Multiply Data Operator in RapidMiner (no video)

I often use the Multiply operator to make copies of my data set and feed it into different learners. I do this because sometimes I don't know if a Neural Net operator, or an SVM operator, gives me better performance. Once I know which operator performs my task better, I then use the parameter optimization process to see if I can squeeze more accuracy out it.

The sampling process below uses the Iris data set, just switch it out with your data set and enjoy.

Striping carriage returns, spaces, and tabs using Python and RapidMiner (code)

I've been working on some Python code for a RapidMiner process. What I want to do is simplify my Instagram Hashtag Tool and make it go faster.

Part of that work is extracting Instagram comments for text processing. I ran into utter hell trying to export those comments into a CSV file that RapidMiner could read. It was exporting the data just fine but wrapping the comment into carriage returns. For some strange reason, RapidMiner can not read carriage returned data in a cell. It can only read the first line. Luckily with the help of some users, I managed to work around and find a solution on my end. DO all the carriage return striping on my end before export.

The trick is to strip all carriage returns, spaces, tabs, etc using the regular expression 's', then replace the stripped items with a space like this ' ' in place. While this isn't elegant, it had to be done because Instagram comments are so messy to begin with.

#!/usr/bin/env python

# -*- coding: utf-8 -*-

import pandas as pd

import json

from pprint import pprint

import csv

import re

df = pd.read_json('https://www.instagram.com/explore/tags/film/?__a=1')

name = df['graphql']['hashtag']['name']

hashtag_count = df['graphql']['hashtag']['edge_hashtag_to_media']['count']

count_list = []

likes = df['graphql']['hashtag']['edge_hashtag_to_media']['edges']

for i in likes:

add = (df['graphql']['hashtag']['name'], df['graphql']['hashtag']['edge_hashtag_to_media']['count'], i['node']['id'], i['node']['edge_liked_by']['count'], i['node']['display_url'])

count_list.append(add)

print(count_list)

count_out = pd.DataFrame(count_list)

count_out.columns = ['hashtag', 'count', 'user_id', 'likes', 'image_url']

# This just exports out a CSV with the above data. Plan is to use this in a RM process

count_out.to_csv('out.csv')

# Now comes the hard part, preparing the comments for a RM Process.

# This is where the carriage returns killed me for hours

text_export = []

rawtext = df['graphql']['hashtag']['edge_hashtag_to_media']['edges']

for i in rawtext:

rawtext = i['node']['edge_media_to_caption']['edges']

#print(rawtext)

for j in rawtext:

final_text = j['node']['text']

df['final_text'] = j['node']['text']

text_export.append(final_text)

print(df['final_text'])

text_out = pd.DataFrame(text_export)

#This is the key, I had to strip everything using s and replacing it with a space via ' '

text_out.replace(r's',' ', regex=True, inplace=True)

text_out.columns = ['comment']

text_out.to_csv('out2.csv', line_terminator='rn')

Word clouds in RapidMiner and R

There was a question from the RapidMiner Community on how to make word clouds in RapidMiner using R. It's really easy.

{{< youtube shOxzHFpJ5U >}}

First, you'll need to make sure you have the Execute R extension installed and configured, then you need to download the "wordcloud" and "RColorBrewer" packages from R Cran Repository.

Finally, grab this sample XML and pop it in your RapidMiner Studio. Note: I saved the image to my desktop, you'll have to repath it to where you want to save the PNG.

<?xml version="1.0" encoding="UTF-8"?><process version="8.2.001">

<context>

<input/>

<output/>

<macros/>

</context>

<operator activated="true" class="process" compatibility="8.2.001" expanded="true" name="Process">

<process expanded="true">

<operator activated="true" class="social_media:search_twitter" compatibility="8.1.000" expanded="true" height="68" name="Search Twitter" width="90" x="45" y="34">

<parameter key="connection" value="Twitter"/>

<parameter key="query" value="rapidminer"/>

</operator>

<operator activated="true" class="select_attributes" compatibility="8.2.001" expanded="true" height="82" name="Select Attributes" width="90" x="179" y="34">

<parameter key="attribute_filter_type" value="single"/>

<parameter key="attribute" value="Text"/>

</operator>

<operator activated="true" class="nominal_to_text" compatibility="8.2.001" expanded="true" height="82" name="Nominal to Text" width="90" x="313" y="34">

<parameter key="attribute_filter_type" value="single"/>

<parameter key="attribute" value="Text"/>

</operator>

<operator activated="true" class="text:process_document_from_data" compatibility="8.1.000" expanded="true" height="82" name="Process Documents from Data" width="90" x="447" y="34">

<parameter key="prune_method" value="percentual"/>

<list key="specify_weights"/>

<process expanded="true">

<operator activated="true" class="text:tokenize" compatibility="8.1.000" expanded="true" height="68" name="Tokenize" width="90" x="112" y="34"/>

<connect from_port="document" to_op="Tokenize" to_port="document"/>

<connect from_op="Tokenize" from_port="document" to_port="document 1"/>

<portSpacing port="source_document" spacing="0"/>

<portSpacing port="sink_document 1" spacing="0"/>

<portSpacing port="sink_document 2" spacing="0"/>

</process>

</operator>

<operator activated="true" class="text:wordlist_to_data" compatibility="8.1.000" expanded="true" height="82" name="WordList to Data" width="90" x="581" y="85"/>

<operator activated="true" class="select_attributes" compatibility="8.2.001" expanded="true" height="82" name="Select Attributes (2)" width="90" x="715" y="85">

<parameter key="attribute_filter_type" value="subset"/>

<parameter key="attributes" value="word|total"/>

</operator>

<operator activated="true" class="r_scripting:execute_r" compatibility="8.1.000" expanded="true" height="82" name="Execute R" width="90" x="849" y="85">

<parameter key="script" value="# rm_main is a mandatory function, # the number of arguments has to be the number of input ports (can be none) rm_main = function(data) { 	library("wordcloud") 	library("RColorBrewer") 	 	png("C:\Users\TomOtt\Desktop\wordcloud.png", width=1280,height=800) 	wordcloud(words = data$word, freq = data$total, min.freq = 1, max.words=200, random.order=FALSE, rot.per=0.35, colors=brewer.pal(8, "Dark2")) 	dev.off() 	 	return (data) } "/>

</operator>

<connect from_op="Search Twitter" from_port="output" to_op="Select Attributes" to_port="example set input"/>

<connect from_op="Select Attributes" from_port="example set output" to_op="Nominal to Text" to_port="example set input"/>

<connect from_op="Nominal to Text" from_port="example set output" to_op="Process Documents from Data" to_port="example set"/>

<connect from_op="Process Documents from Data" from_port="word list" to_op="WordList to Data" to_port="word list"/>

<connect from_op="WordList to Data" from_port="example set" to_op="Select Attributes (2)" to_port="example set input"/>

<connect from_op="Select Attributes (2)" from_port="example set output" to_op="Execute R" to_port="input 1"/>

<connect from_op="Execute R" from_port="output 1" to_port="result 1"/>

<portSpacing port="source_input 1" spacing="0"/>

<portSpacing port="sink_result 1" spacing="0"/>

<portSpacing port="sink_result 2" spacing="0"/>

</process>

</operator>

</process>

Installing RapidMiner on AWS

or a project I had to spin up an EC2 instance and install RapidMiner Studio there. I needed a static IP to make some connections to a database across the ocean and figured AWS was the easiest route. It turned out not to be that easy.

I ended up having to install Java 8, RapidMiner Studio, X11 Windows, and XMING server, and use Putty to bring it all together. The end result, I had to port forward X11 to my Windows Laptop. It works but it's a bit complex and slow.

{{< img src="RMX11.png" alt="RapidMiner and X11" >}}

Spin up an AWS instance

First you have to spin up an instance on AWS. I chose a bare-bones Linux AMI and installed Java 8 on it. You don't need to select a static IP but make sure to create the appropriate security group and enable port 22 in your security group or else Putty won't work.

Then install RapidMiner Studio. I used the latest (version 8.2) Linux version and downloaded it using the 'wget' command. I saved it into a folder called 'work'. You can save it anywhere but make sure to unzip it. It will create a RapidMiner-Studio folder.

Connect via Putty

I won't get into details here on how to connect Putty to AWS but here is a great tutorial from Amazon.

Connect and then install the X11 libraries. I found out how to do it here. Also, you'll have to enable X11 port forwarding in Putty, see the article on how to toggle it on.

Then logoff.

Install XMING Server

You're going to need XMING Server if you're coming from Windows. You can get it here. Install it and launch it.

Connect to your AWS instance

Using Putty, connect back to AWS instance. Once there, test your XMING and X11 installation by typing 'xeyes'. If everything is installed correctly, you should get a window pop-up with googly eyes.

Then navigate to where you install RapidMiner Studio, find the RapidMiner-Studio.sh file and execute it doing './RapidMiner-Studio.sh'

If everything is correct, RapidMiner Studio should pop up like the image above.

Done. Put a fork in me.

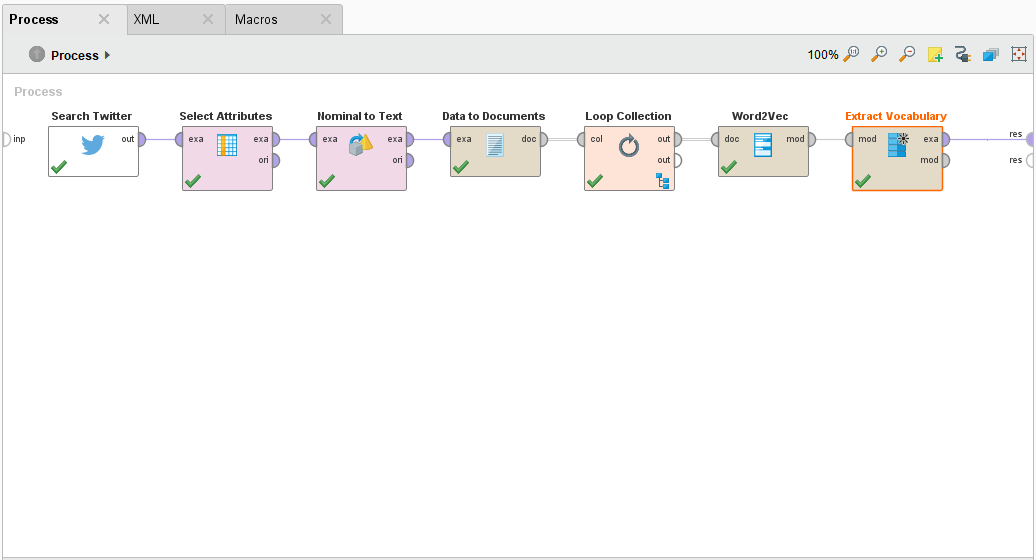

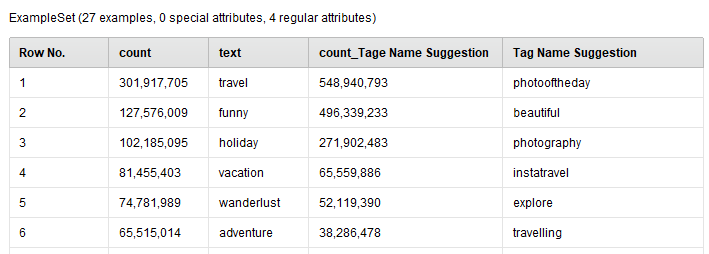

Word to Vec (Word2Vec) in RapidMiner

This is an example process of how to use Word2Vec in RapidMiner with the Search Twitter operator. For more information check out this post on the community.

I'll be going over this in a bit more detail at my next live stream here.

{{< img src="Word2Vec.png" alt="Word2Vec RapidMiner" >}}

<?xml version="1.0" encoding="UTF-8"?><process version="8.1.001">

<context>

<input/>

<output/>

<macros/>

</context>

<operator activated="true" class="process" compatibility="8.1.001" expanded="true" name="Process">

<process expanded="true">

<operator activated="true" class="social_media:search_twitter" compatibility="8.1.000" expanded="true" height="68" name="Search Twitter" width="90" x="45" y="34">

<parameter key="connection" value="Twitter - Studio Connection"/>

<parameter key="query" value="rapidminer"/>

<parameter key="locale" value="en"/>

</operator>

<operator activated="true" class="select_attributes" compatibility="8.1.001" expanded="true" height="82" name="Select Attributes" width="90" x="45" y="136">

<parameter key="attribute_filter_type" value="single"/>

<parameter key="attribute" value="Text"/>

<parameter key="include_special_attributes" value="true"/>

</operator>

<operator activated="true" class="nominal_to_text" compatibility="8.1.001" expanded="true" height="82" name="Nominal to Text" width="90" x="45" y="238"/>

<operator activated="true" class="text:data_to_documents" compatibility="8.1.000" expanded="true" height="68" name="Data to Documents" width="90" x="246" y="34">

<list key="specify_weights"/>

</operator>

<operator activated="true" class="loop_collection" compatibility="8.1.001" expanded="true" height="82" name="Loop Collection" width="90" x="246" y="136">

<process expanded="true">

<operator activated="true" class="text:transform_cases" compatibility="8.1.000" expanded="true" height="68" name="Transform Cases" width="90" x="112" y="34"/>

<operator activated="true" class="text:tokenize" compatibility="8.1.000" expanded="true" height="68" name="Tokenize" width="90" x="581" y="34"/>

<connect from_port="single" to_op="Transform Cases" to_port="document"/>

<connect from_op="Transform Cases" from_port="document" to_op="Tokenize" to_port="document"/>

<connect from_op="Tokenize" from_port="document" to_port="output 1"/>

<portSpacing port="source_single" spacing="0"/>

<portSpacing port="sink_output 1" spacing="0"/>

<portSpacing port="sink_output 2" spacing="0"/>

</process>

</operator>

<operator activated="true" class="word2vec:Word2Vec_Learner" compatibility="1.0.000" expanded="true" height="68" name="Word2Vec " width="90" x="447" y="34"/>

<operator activated="true" class="word2vec:Get_Vocabulary" compatibility="1.0.000" expanded="true" height="82" name="Extract Vocabulary" width="90" x="581" y="34">

<parameter key="Get Full Vocabulary" value="true"/>

<parameter key="Take Random Words" value="false"/>

<parameter key="Number of Words to Pull" value="10"/>

</operator>

<connect from_op="Search Twitter" from_port="output" to_op="Select Attributes" to_port="example set input"/>

<connect from_op="Select Attributes" from_port="example set output" to_op="Nominal to Text" to_port="example set input"/>

<connect from_op="Nominal to Text" from_port="example set output" to_op="Data to Documents" to_port="example set"/>

<connect from_op="Data to Documents" from_port="documents" to_op="Loop Collection" to_port="collection"/>

<connect from_op="Loop Collection" from_port="output 1" to_op="Word2Vec " to_port="doc"/>

<connect from_op="Word2Vec " from_port="mod" to_op="Extract Vocabulary" to_port="mod"/>

<connect from_op="Extract Vocabulary" from_port="exa" to_port="result 1"/>

<portSpacing port="source_input 1" spacing="0"/>

<portSpacing port="sink_result 1" spacing="0"/>

<portSpacing port="sink_result 2" spacing="0"/>

</process>

</operator>

</process>

Extract OpenStreetMap Data in RapidMiner

A few weeks ago I wanted to play with the Enrich by Webservice operator. The operator is part of the RapidMiner Web Mining extension and is accessible through the Marketplace. I wanted to do reverse lookups based on latitude and longitude. In my searching, I came across this post on how to do it using XPath and Google. That post was most informative and I used it as a starting point for my process building. I wanted to do the same thing but use OpenStreetMaps. Why OSM? OSM is an open-source database of Geographic Information Systems (GIS) and is rich with data. Plus, it's a bit easier to use than Google.

After a few minutes of tinkering, I was successful. I built a process to go out to the USGS Earthquake site, grab the current CSV, load it, and then do a reverse lookup using the latitude and longitude. The process then creates a column with the country via the XPath of "//reversegeocode/addressparts/country/text()."

Here's what the process looks like:

Here's the XML of the process:

<?xml version="1.0" encoding="UTF-8"?><process version="7.6.001">

<context>

<input/>

<output/>

<macros/>

</context>

<operator activated="true" class="process" compatibility="7.6.001" expanded="true" name="Process">

<process expanded="true">

<operator activated="true" class="open_file" compatibility="7.6.001" expanded="true" height="68" name="Open File" width="90" x="45" y="30">

<parameter key="resource_type" value="URL"/>

<parameter key="filename" value="https://earthquake.usgs.gov/earthquakes/feed/v1.0/summary/2.5_day.csv"/>

<parameter key="url" value="https://earthquake.usgs.gov/earthquakes/feed/v1.0/summary/2.5_day.csv"/>

<description align="center" color="transparent" colored="false" width="126">Open USGS URL</description>

</operator>

<operator activated="true" class="read_csv" compatibility="7.6.001" expanded="true" height="68" name="Read CSV" width="90" x="179" y="30">

<parameter key="column_separators" value=","/>

<list key="annotations"/>

<list key="data_set_meta_data_information"/>

<description align="center" color="transparent" colored="false" width="126">Read CSV data</description>

</operator>

<operator activated="true" class="select_attributes" compatibility="7.6.001" expanded="true" height="82" name="Select Attributes" width="90" x="380" y="30">

<parameter key="attribute_filter_type" value="subset"/>

<parameter key="attributes" value="latitude|longitude|time|mag"/>

<description align="center" color="transparent" colored="false" width="126">Select Columns</description>

</operator>

<operator activated="true" class="rename" compatibility="7.6.001" expanded="true" height="82" name="Rename" width="90" x="514" y="30">

<parameter key="old_name" value="latitude"/>

<parameter key="new_name" value="Latitude"/>

<list key="rename_additional_attributes">

<parameter key="longitude" value="Longitude"/>

</list>

<description align="center" color="transparent" colored="false" width="126">Rename Columns</description>

</operator>

<operator activated="true" class="web:enrich_data_by_webservice" compatibility="7.3.000" expanded="true" height="68" name="Enrich Data by Webservice" width="90" x="648" y="30">

<parameter key="query_type" value="XPath"/>

<list key="string_machting_queries"/>

<list key="regular_expression_queries"/>

<list key="regular_region_queries"/>

<list key="xpath_queries">

<parameter key="ExtractedCountry" value="//reversegeocode/addressparts/country/text()"/>

</list>

<list key="namespaces"/>

<parameter key="assume_html" value="false"/>

<list key="index_queries"/>

<list key="jsonpath_queries"/>

<parameter key="url" value="https://nominatim.openstreetmap.org/reverse?format=xml&lat=<%Latitude%>&lon=<%Longitude%>&zoom=18&addressdetails=1"/>

<list key="request_properties"/>

<description align="center" color="transparent" colored="false" width="126">Extract Country based on Lat/Long</description>

</operator>

<connect from_op="Open File" from_port="file" to_op="Read CSV" to_port="file"/>

<connect from_op="Read CSV" from_port="output" to_op="Select Attributes" to_port="example set input"/>

<connect from_op="Select Attributes" from_port="example set output" to_op="Rename" to_port="example set input"/>

<connect from_op="Rename" from_port="example set output" to_op="Enrich Data by Webservice" to_port="Example Set"/>

<connect from_op="Enrich Data by Webservice" from_port="ExampleSet" to_port="result 1"/>

<portSpacing port="source_input 1" spacing="0"/>

<portSpacing port="sink_result 1" spacing="0"/>

<portSpacing port="sink_result 2" spacing="0"/>

</process>

</operator>

</process>

Note: Updated RapidMiner XML with new USGS URL on 2017-09-07

Calculating GeoDistance in RapidMiner with Python

I showed how you can use the Enrich by Webservice operator and OpenStreetMaps to do reverse geocoding lookups. This post will show how to calculate Geospatial distances between two latitude and longitude points. First using a RapidMiner and then using the GeoPy Python module.

This was fun because it touched on my civil engineering classes. I used to calculate distances from latitude and longitude in my land surveying classes.

My first step was to select a "home" location, which was 1 Penn Plaza, NY NY. Then I downloaded the latest list of earthquakes from the USGS website. The last step was to calculate the distance from home to each earthquake location.

The biggest time suck for me was building all the formulas in RapidMiner's Generate Attribute (GA) operator. That took about 15 minutes. Then I had to backcheck the calculations with a website to make sure they matched. RapidMiner excelled in the speed of building and analyzing this process but I did notice the results were a bit off from the GeoPy python process.

There was a variance of about +/- 4km in each distance. This is because I hardcoded in the earth's diameter as 6371000 km for the RapidMiner process, but the diameter of the Earth changes based on your location. This is because the earth isn't a sphere but more of an ellipsoid and the diameter isn't uniform. The GeoPy great_circle calculation accounts for this by adjusting the calculation.

For a proof of concept, both work just fine.

There were a few snags in my Python code that took me longer to finish and I chalk this up to my novice ability at writing Python. I didn't realize that I had to create a tuple out of the lat/long columns and then use a for loop to iterate over the entire tuple list. But this was something that my friend solved in 5 minutes. Otherwise than that, the Python code works well.

Here's the XML of the process:

<?xml version="1.0" encoding="UTF-8" standalone="no"?>

<process version="6.5.002">

<context>

<input/>

<output/>

<macros/>

</context>

<operator activated="true" class="process" compatibility="6.5.002" expanded="true" name="Process">

<process expanded="true">

<operator activated="true" class="open_file" compatibility="6.5.002" expanded="true" height="60" name="Open File" width="90" x="45" y="30">

<parameter key="resource_type" value="URL"/>

<parameter key="url" value="https://earthquake.usgs.gov/earthquakes/feed/v1.0/summary/2.5_day.csv"/>

<description align="center" color="transparent" colored="false" width="126">Open Earthquake USGS URL</description>

</operator>

<operator activated="true" class="read_csv" compatibility="6.5.002" expanded="true" height="60" name="Read CSV" width="90" x="179" y="30">

<parameter key="column_separators" value=","/>

<list key="annotations"/>

<list key="data_set_meta_data_information"/>

<description align="center" color="transparent" colored="false" width="126">Read CSV file</description>

</operator>

<operator activated="true" class="select_attributes" compatibility="6.5.002" expanded="true" height="76" name="Select Attributes" width="90" x="313" y="30">

<parameter key="attribute_filter_type" value="subset"/>

<parameter key="attributes" value="latitude|longitude|mag"/>

<description align="center" color="transparent" colored="false" width="126">Select Magnitude, Lat, and Long</description>

</operator>

<operator activated="true" class="filter_examples" compatibility="6.5.002" expanded="true" height="94" name="Filter Examples" width="90" x="447" y="30">

<list key="filters_list">

<parameter key="filters_entry_key" value="mag.gt.4"/>

</list>

<description align="center" color="transparent" colored="false" width="126">Filter for quakes &gt; mag 4</description>

</operator>

<operator activated="true" class="rename" compatibility="6.5.002" expanded="true" height="76" name="Rename" width="90" x="581" y="30">

<parameter key="old_name" value="latitude"/>

<parameter key="new_name" value="Latitude"/>

<list key="rename_additional_attributes">

<parameter key="longitude" value="Longitude"/>

</list>

<description align="center" color="transparent" colored="false" width="126">Rename columns</description>

</operator>

<operator activated="true" class="generate_attributes" compatibility="6.5.002" expanded="true" height="76" name="Generate Attributes" width="90" x="715" y="30">

<list key="function_descriptions">

<parameter key="Rad_Lat" value="Latitude*(pi/180)"/>

<parameter key="Rad_Long" value="Longitude*(pi/180)"/>

<parameter key="Lat_Home" value="40.750938"/>

<parameter key="Long_Home" value="-73.991594"/>

<parameter key="Rad_Lat_Home" value="Lat_Home*(pi/180)"/>

<parameter key="Rad_Long_Home" value="Long_Home*(pi/180)"/>

<parameter key="Rad_Diff_Lat" value="(Latitude-Lat_Home)*(pi/180)"/>

<parameter key="Rad_Diff_Long" value="(Longitude-Long_Home)*(pi/180)"/>

<parameter key="a" value="(sin(Rad_Diff_Lat/2))^2 + cos(Rad_Lat) * cos(Rad_Lat_Home) * (sin(Rad_Diff_Long/2))^2"/>

<parameter key="c" value="2 * atan2(sqrt(a), sqrt(1-a) )"/>

<parameter key="distance_km" value="(6371000*c)/1000"/>

<parameter key="distance_miles" value="distance_km*0.621371"/>

</list>

<description align="center" color="transparent" colored="false" width="126">Make lots of calculations<br/></description>

</operator>

<connect from_op="Open File" from_port="file" to_op="Read CSV" to_port="file"/>

<connect from_op="Read CSV" from_port="output" to_op="Select Attributes" to_port="example set input"/>

<connect from_op="Select Attributes" from_port="example set output" to_op="Filter Examples" to_port="example set input"/>

<connect from_op="Filter Examples" from_port="example set output" to_op="Rename" to_port="example set input"/>

<connect from_op="Rename" from_port="example set output" to_op="Generate Attributes" to_port="example set input"/>

<connect from_op="Generate Attributes" from_port="example set output" to_port="result 1"/>

<portSpacing port="source_input 1" spacing="0"/>

<portSpacing port="sink_result 1" spacing="0"/>

<portSpacing port="sink_result 2" spacing="0"/>

</process>

</operator>

</process>

Here's the python process:

#!/usr/bin/python

import pandas as pd

from geopy.geocoders import Nominatim

from geopy.distance import great_circle

geolocator = Nominatim()

location = geolocator.geocode("1 Penn Plaza, NY, NY")

home = (location.latitude, location.longitude) #Set Home Location

earthquake = pd.read_csv('https://earthquake.usgs.gov/earthquakes/feed/v1.0/summary/2.5_day.csv') #Read CSV file

selection = (earthquake['mag'] >= 4) #Filter for earthquakes > mag 4

earthquake = earthquake[selection].dropna(how = 'any', subset = ['latitude', 'longitude']).drop(['time', 'depth', 'mag', 'magType', 'nst','gap','dmin', 'rms','net', 'id','updated','place','type'], axis=1)

earthquake = earthquake.convert_objects(convert_numeric=True)

earthquake.describe(include='all') #not necessary but I like to see a description of the data I'm pushing downstream

earthquake['combined'] = zip(earthquake.latitude, earthquake.longitude) #create tuple from pandas dataframe

print earthquake.combined #double check the list

print [great_circle(home, (lt,lng)) for (lt,lng) in earthquake.combined] #brackets are a short form of loop

Mean Reversion Trading in RapidMiner

Lately, I've been thinking about becoming more active in trading again. I

was reviewing some strategies and decided to recreate a mean reversion

trading process in RapidMiner. I found a mean reversion

trading strategy

that uses Python here and just recreated it in RapidMiner.

The Process

The process is quite simple. You do the following:

- Load in stock quote data via CSV;

- Calculate daily returns;

- Calculate a 20 day moving average;

- Calculate a rolling 90 day standard deviation;

- Generate Trading Criteria per the article;

- Wrap it all together and look at the Buy vs Hold and Buy Signals.

Mind you, this doesn't include commission costs and slippage. I suspect

that once I add that in, the Buy and Hold strategy will be the best.

<?xml version="1.0" encoding="UTF-8"?><process version="8.1.001">

<context>

<input/>

<output/>

<macros/>

</context>

<operator activated="true" class="process" compatibility="8.1.001" expanded="true" name="Process">

<parameter key="encoding" value="SYSTEM"/>

<process expanded="true">

<operator activated="true" class="subprocess" compatibility="8.1.001" expanded="true" height="82" name="Load Data" width="90" x="45" y="34">

<process expanded="true">

<operator activated="true" class="read_csv" compatibility="8.1.000" expanded="true" height="68" name="Read Downloaded S&P500" width="90" x="45" y="34">

<parameter key="csv_file" value="C:\Users\TomOtt\Downloads\INTC.csv"/>

<parameter key="column_separators" value=","/>

<parameter key="first_row_as_names" value="false"/>

<list key="annotations">

<parameter key="0" value="Name"/>

</list>

<parameter key="encoding" value="windows-1252"/>

<list key="data_set_meta_data_information">

<parameter key="0" value="Date.true.polynominal.attribute"/>

<parameter key="1" value="Open.true.real.attribute"/>

<parameter key="2" value="High.true.real.attribute"/>

<parameter key="3" value="Low.true.real.attribute"/>

<parameter key="4" value="Close.true.real.attribute"/>

<parameter key="5" value="Adj Close.true.real.attribute"/>

<parameter key="6" value="Volume.true.real.attribute"/>

</list>

<description align="center" color="transparent" colored="false" width="126">Due to Yahoo changes, must download CSV file manually for now!</description>

</operator>

<operator activated="true" class="series:lag_series" compatibility="7.4.000" expanded="true" height="82" name="Lag Series" width="90" x="179" y="34">

<list key="attributes">

<parameter key="Close" value="1"/>

</list>

</operator>

<operator activated="true" class="generate_attributes" compatibility="8.1.001" expanded="true" height="82" name="Returns" width="90" x="313" y="34">

<list key="function_descriptions">

<parameter key="Returns" value="(Close-Open)/Open"/>

</list>

</operator>

<operator activated="true" class="select_attributes" compatibility="8.1.001" expanded="true" height="82" name="Select Attributes for ETL" width="90" x="447" y="34">

<parameter key="attribute_filter_type" value="single"/>

<parameter key="attribute" value="^GSPC_CLOSE-1"/>

<parameter key="invert_selection" value="true"/>

</operator>

<operator activated="true" class="series:moving_average" compatibility="7.4.000" expanded="true" height="82" name="MA 20 DAY" width="90" x="581" y="34">

<parameter key="attribute_name" value="Close"/>

<parameter key="window_width" value="20"/>

</operator>

<operator activated="true" class="series:windowing" compatibility="7.4.000" expanded="true" height="82" name="Window for 90 Day STDEV" width="90" x="715" y="34">

<parameter key="window_size" value="90"/>

<parameter key="label_attribute" value="Close"/>

</operator>

<operator activated="true" class="generate_aggregation" compatibility="8.1.001" expanded="true" height="82" name="Calc STD Dev for 90 day window" width="90" x="849" y="34">

<parameter key="attribute_name" value="StDev"/>

<parameter key="attribute_filter_type" value="regular_expression"/>

<parameter key="attributes" value="Log Returns-0|Log Returns-1|Log Returns-2|Log Returns-3|Log Returns-4"/>

<parameter key="regular_expression" value="Close\-[0-9]"/>

<parameter key="aggregation_function" value="standard_deviation"/>

</operator>

<operator activated="true" class="select_attributes" compatibility="8.1.001" expanded="true" height="82" name="Select Attributes" width="90" x="983" y="34">

<parameter key="attribute_filter_type" value="subset"/>

<parameter key="attributes" value="Close-0|Date-0|High-0|Low-0|Open-0|StDev|Volume-0|average(Close)-0|Log Returns-0|Returns-0"/>

</operator>

<operator activated="true" class="rename" compatibility="8.1.001" expanded="true" height="82" name="Rename a bunch of stuff" width="90" x="1117" y="34">

<parameter key="old_name" value="Date-0"/>

<parameter key="new_name" value="Date"/>

<list key="rename_additional_attributes">

<parameter key="Close-0" value="Close"/>

<parameter key="High-0" value="High"/>

<parameter key="Returns-0" value="Returns"/>

<parameter key="Volume-0" value="Volume"/>

<parameter key="Low-0" value="Low"/>

<parameter key="Open-0" value="Open"/>

<parameter key="average(Close)-0" value="MA20"/>

<parameter key="StDev" value="90daySTDEV"/>

</list>

</operator>

<operator activated="true" class="nominal_to_date" compatibility="8.1.001" expanded="true" height="82" name="Convert dates" width="90" x="1251" y="34">

<parameter key="attribute_name" value="Date"/>

<parameter key="date_format" value="yyyy-MM-dd"/>

</operator>

<operator activated="true" class="series:lag_series" compatibility="7.4.000" expanded="true" height="82" name="Lag Low" width="90" x="1385" y="34">

<list key="attributes">

<parameter key="Low" value="1"/>

</list>

</operator>

<operator activated="true" class="replace_missing_values" compatibility="8.1.001" expanded="true" height="103" name="Replace Missing Values" width="90" x="1519" y="34">

<parameter key="attribute_filter_type" value="value_type"/>

<parameter key="value_type" value="numeric"/>

<parameter key="default" value="zero"/>

<list key="columns"/>

</operator>

<connect from_op="Read Downloaded S&P500" from_port="output" to_op="Lag Series" to_port="example set input"/>

<connect from_op="Lag Series" from_port="example set output" to_op="Returns" to_port="example set input"/>

<connect from_op="Returns" from_port="example set output" to_op="Select Attributes for ETL" to_port="example set input"/>

<connect from_op="Select Attributes for ETL" from_port="example set output" to_op="MA 20 DAY" to_port="example set input"/>

<connect from_op="MA 20 DAY" from_port="example set output" to_op="Window for 90 Day STDEV" to_port="example set input"/>

<connect from_op="Window for 90 Day STDEV" from_port="example set output" to_op="Calc STD Dev for 90 day window" to_port="example set input"/>

<connect from_op="Calc STD Dev for 90 day window" from_port="example set output" to_op="Select Attributes" to_port="example set input"/>

<connect from_op="Select Attributes" from_port="example set output" to_op="Rename a bunch of stuff" to_port="example set input"/>

<connect from_op="Rename a bunch of stuff" from_port="example set output" to_op="Convert dates" to_port="example set input"/>

<connect from_op="Convert dates" from_port="example set output" to_op="Lag Low" to_port="example set input"/>

<connect from_op="Lag Low" from_port="example set output" to_op="Replace Missing Values" to_port="example set input"/>

<connect from_op="Replace Missing Values" from_port="example set output" to_port="out 1"/>

<portSpacing port="source_in 1" spacing="0"/>

<portSpacing port="sink_out 1" spacing="0"/>

<portSpacing port="sink_out 2" spacing="0"/>

<description align="center" color="yellow" colored="false" height="174" resized="false" width="180" x="24" y="231">The goal is to pull this data from a database in the future.<br/><br/>The database will be populated with stock data from the Nasdaq automatically overnight using EOD</description>

</process>

<description align="center" color="transparent" colored="false" width="126">Load CSV data</description>

</operator>

<operator activated="true" class="generate_attributes" compatibility="8.1.001" expanded="true" height="82" name="Generate Trading Signal" width="90" x="179" y="34">

<list key="function_descriptions">

<parameter key="Criteria1" value="if((Open<[Low-1]) && (Open-[Low-1])<-[90daySTDEV],1,0)"/>

<parameter key="Criteria2" value="if(Open>MA20,1,0)"/>

<parameter key="Signal" value="if(([Criteria1]+[Criteria2])==2,1,0)"/>

</list>

</operator>

<operator activated="true" class="select_attributes" compatibility="8.1.001" expanded="true" height="82" name="Select Final Col" width="90" x="313" y="34">

<parameter key="attribute_filter_type" value="subset"/>

<parameter key="attributes" value="Close|Date|Signal|Returns"/>

</operator>

<operator activated="true" class="multiply" compatibility="8.1.001" expanded="true" height="82" name="Multiply (2)" width="90" x="447" y="34"/>

<operator activated="true" class="subprocess" compatibility="8.1.001" expanded="true" height="103" name="Cum Sum of Returns" width="90" x="581" y="34">

<process expanded="true">

<operator activated="true" class="multiply" compatibility="8.1.001" expanded="true" height="103" name="Multiply (3)" width="90" x="112" y="34"/>

<operator activated="true" class="series:integrate_series" compatibility="7.4.000" expanded="true" height="82" name="All Signals" width="90" x="447" y="289">

<parameter key="attribute_name" value="Returns"/>

</operator>

<operator activated="true" class="filter_examples" compatibility="8.1.001" expanded="true" height="103" name="Filter Examples (2)" width="90" x="313" y="34">

<list key="filters_list">

<parameter key="filters_entry_key" value="Signal.eq.1"/>

</list>

</operator>

<operator activated="true" class="series:integrate_series" compatibility="7.4.000" expanded="true" height="82" name="Buy Signal Only" width="90" x="447" y="34">

<parameter key="attribute_name" value="Returns"/>

</operator>

<operator activated="true" class="rename" compatibility="8.1.001" expanded="true" height="82" name="Buy Returns" width="90" x="581" y="34">

<parameter key="old_name" value="cumulative(Returns)"/>

<parameter key="new_name" value="Buy Signals Returns"/>

<list key="rename_additional_attributes"/>

</operator>

<operator activated="true" class="rename" compatibility="8.1.001" expanded="true" height="82" name="Buy and Hold Returns" width="90" x="581" y="289">

<parameter key="old_name" value="cumulative(Returns)"/>

<parameter key="new_name" value="Buy and Hold"/>

<list key="rename_additional_attributes"/>

</operator>

<connect from_port="in 1" to_op="Multiply (3)" to_port="input"/>

<connect from_op="Multiply (3)" from_port="output 1" to_op="Filter Examples (2)" to_port="example set input"/>

<connect from_op="Multiply (3)" from_port="output 2" to_op="All Signals" to_port="example set input"/>

<connect from_op="All Signals" from_port="example set output" to_op="Buy and Hold Returns" to_port="example set input"/>

<connect from_op="Filter Examples (2)" from_port="example set output" to_op="Buy Signal Only" to_port="example set input"/>

<connect from_op="Buy Signal Only" from_port="example set output" to_op="Buy Returns" to_port="example set input"/>

<connect from_op="Buy Returns" from_port="example set output" to_port="out 1"/>

<connect from_op="Buy and Hold Returns" from_port="example set output" to_port="out 2"/>

<portSpacing port="source_in 1" spacing="0"/>

<portSpacing port="source_in 2" spacing="0"/>

<portSpacing port="sink_out 1" spacing="0"/>

<portSpacing port="sink_out 2" spacing="0"/>

<portSpacing port="sink_out 3" spacing="0"/>

<description align="center" color="yellow" colored="false" height="105" resized="false" width="180" x="337" y="147">Set the previous value to the missing value</description>

</process>

</operator>

<operator activated="false" class="subprocess" compatibility="8.1.001" expanded="true" height="103" name="Subprocess" width="90" x="581" y="289">

<process expanded="true">

<operator activated="true" class="series:windowing" compatibility="7.4.000" expanded="true" height="82" name="Window for Training" width="90" x="45" y="187">

<parameter key="window_size" value="1"/>

<parameter key="create_label" value="true"/>

<parameter key="label_attribute" value="Signal"/>

<parameter key="add_incomplete_windows" value="true"/>

</operator>

<operator activated="true" class="concurrency:optimize_parameters_grid" compatibility="8.1.001" expanded="true" height="124" name="Optimize Parameters (Grid)" width="90" x="179" y="34">

<list key="parameters">

<parameter key="Backtesting.test_window_width" value="[2;5;4;linear]"/>

<parameter key="Backtesting.training_window_step_size" value="[1;5;4;linear]"/>

<parameter key="Backtesting.training_window_width" value="[2;5;4;linear]"/>

<parameter key="SVM for HV Calc.kernel_gamma" value="[0.01;1000;5;logarithmic]"/>

<parameter key="SVM for HV Calc.C" value="[0;10000;2;linear]"/>

</list>

<process expanded="true">

<operator activated="true" class="series:sliding_window_validation" compatibility="7.4.000" expanded="true" height="124" name="Backtesting" width="90" x="179" y="34">

<parameter key="training_window_width" value="6"/>

<parameter key="test_window_width" value="6"/>

<parameter key="cumulative_training" value="true"/>

<process expanded="true">

<operator activated="true" class="support_vector_machine" compatibility="8.1.001" expanded="true" height="124" name="SVM for HV Calc" width="90" x="179" y="34">

<parameter key="kernel_type" value="radial"/>

<parameter key="kernel_gamma" value="0.10000000000000002"/>

<parameter key="C" value="200.0"/>

</operator>

<connect from_port="training" to_op="SVM for HV Calc" to_port="training set"/>

<connect from_op="SVM for HV Calc" from_port="model" to_port="model"/>

<portSpacing port="source_training" spacing="0"/>

<portSpacing port="sink_model" spacing="0"/>

<portSpacing port="sink_through 1" spacing="0"/>

</process>

<process expanded="true">

<operator activated="true" class="apply_model" compatibility="7.1.001" expanded="true" height="82" name="Apply Model In Testing" width="90" x="45" y="34">

<list key="application_parameters"/>

</operator>

<operator activated="true" class="series:forecasting_performance" compatibility="7.4.000" expanded="true" height="82" name="Forecast Performance" width="90" x="246" y="34">

<parameter key="horizon" value="1"/>

<parameter key="main_criterion" value="prediction_trend_accuracy"/>

</operator>

<connect from_port="model" to_op="Apply Model In Testing" to_port="model"/>

<connect from_port="test set" to_op="Apply Model In Testing" to_port="unlabelled data"/>

<connect from_op="Apply Model In Testing" from_port="labelled data" to_op="Forecast Performance" to_port="labelled data"/>

<connect from_op="Forecast Performance" from_port="performance" to_port="averagable 1"/>

<portSpacing port="source_model" spacing="0"/>

<portSpacing port="source_test set" spacing="0"/>

<portSpacing port="source_through 1" spacing="0"/>

<portSpacing port="sink_averagable 1" spacing="0"/>

<portSpacing port="sink_averagable 2" spacing="0"/>

</process>

</operator>

<connect from_port="input 1" to_op="Backtesting" to_port="training"/>

<connect from_op="Backtesting" from_port="model" to_port="model"/>

<connect from_op="Backtesting" from_port="averagable 1" to_port="performance"/>

<portSpacing port="source_input 1" spacing="0"/>

<portSpacing port="source_input 2" spacing="0"/>

<portSpacing port="sink_performance" spacing="0"/>

<portSpacing port="sink_model" spacing="0"/>

<portSpacing port="sink_output 1" spacing="0"/>

</process>

</operator>

<operator activated="true" class="series:windowing" compatibility="7.4.000" expanded="true" height="82" name="Window for Prediction" width="90" x="179" y="289">

<parameter key="window_size" value="1"/>

</operator>

<operator activated="true" class="apply_model" compatibility="7.1.001" expanded="true" height="82" name="Apply SVM model" width="90" x="380" y="136">

<list key="application_parameters"/>

</operator>

<connect from_port="in 1" to_op="Window for Training" to_port="example set input"/>

<connect from_op="Window for Training" from_port="example set output" to_op="Optimize Parameters (Grid)" to_port="input 1"/>

<connect from_op="Window for Training" from_port="original" to_op="Window for Prediction" to_port="example set input"/>

<connect from_op="Optimize Parameters (Grid)" from_port="performance" to_port="out 1"/>